The Crash at Crush is a perennial go-to narrative in the long-running effort to goad uninterested students into obtaining a much-needed grasp of the principles of classical mechanics.

From the Wikipedia:

Crush, Texas, was a temporary “city” established as a one-day publicity stunt in 1896. William George Crush, general passenger agent of the Missouri-Kansas-Texas Railroad (popularly known as the Katy), conceived the idea to demonstrate a train wreck as a spectacle. No admission was charged, and train fares to the crash site were at the reduced rate of US$2 from any location in Texas. As a result about 40,000 people showed up on September 15, 1896, making the new town of Crush, Texas, temporarily the second-largest city in the state.

It seems that William George Crush either failed Physics 101 or, more likely, never enrolled in it. The energy released from the impact of the trains and the explosion of their boilers led to several deaths and many injuries among the 40,000 spectators.

Fast-forwarding 118 years, we find that Stefano “Doc” Meschiari, another Texas entrepreneur, has once again harnessed physics in the name of spectacle with his browser-based video game Super Planet Crash. (The name was changed at the last moment from Super Planet Crush in order to duck potential legal challenges from the recently IPO’d purveyors of Candy Crush.)

In the time-honored tradition of stoking publicity, a press release was just issued:

April 7, 2014

Contact: Tim Stephens (831) 459-2495; stephens@ucsc.edu

Orbital physics is child’s play with Super Planet Crash

A new game and online educational resources are offshoots of the open-source software package astronomers use to find planets beyond our solar system

For Immediate Release

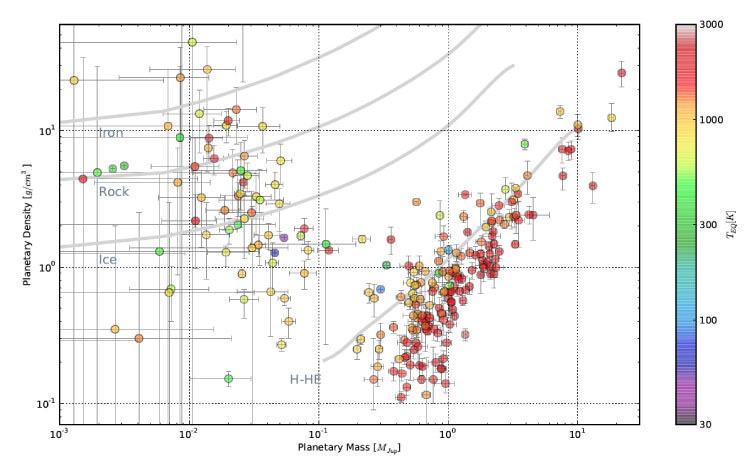

SANTA CRUZ, CA–Super Planet Crash is a pretty simple game: players build their own planetary system, putting planets into orbit around a star and racking up points until they add a planet that destabilizes the whole system. Beneath the surface, however, this addictive little game is driven by highly sophisticated software code that astronomers use to find planets beyond our solar system (called exoplanets).

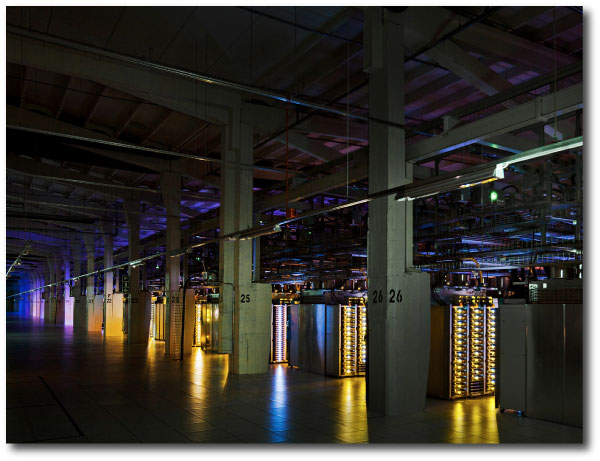

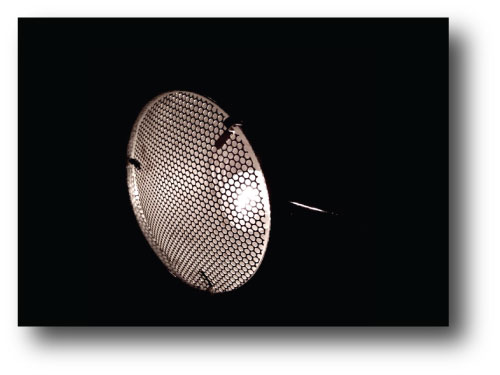

The release of Super Planet Crash (available online at www.stefanom.org/spc) follows the release of the latest version of Systemic Console, a scientific software package used to pull planet discoveries out of the reams of data acquired by telescopes such as the Automated Planet Finder (APF) at the University of California’s Lick Observatory. Developed at UC Santa Cruz, the Systemic Console is integrated into the workflow of the APF, and is also widely used by astronomers to analyze data from other telescopes.

Greg Laughlin, professor and chair of astronomy and astrophysics at UC Santa Cruz, developed Systemic Console with his students, primarily Stefano Meschiari (now a postdoctoral fellow at the University of Texas, Austin). Meschiari did the bulk of the work on the new version, Systemic 2, as a graduate student at UC Santa Cruz. He also used the Systemic code as a foundation to create not only Super Planet Crash but also an online web application (Systemic Live) for educational use.

“Systemic Console is open-source software that we’ve made available for other scientists to use. But we also wanted to create a portal for students and teachers so that anyone can use it,” Laughlin said. “For the online version, Stefano tuned the software to make it more accessible, and then he went even further with Super Planet Crash, which makes the ideas behind planetary systems accessible at the most visceral level.”

Meschiari said he’s seen people quickly get hooked on playing the game. “It doesn’t take long for them to understand what’s going on with the orbital dynamics,” he said.

The educational program, Systemic Live, provides simplified tools that students can use to analyze real data. “Students get a taste of what the real process of exoplanet discovery is like, using the same tools scientists use,” Meschiari said.

The previous version of Systemic was already being used in physics and astronomy classes at UCSC, Columbia University, the Massachusetts Institute of Technology (MIT), and elsewhere, and it was the basis for an MIT Educational Studies program for high school teachers. The new online version has earned raves from professors who are using it.

“The online Systemic Console is a real gift to the community,” said Debra Fischer, professor of astronomy at Yale University. “I use this site to train both undergraduate and graduate students–they love the power of this program.”

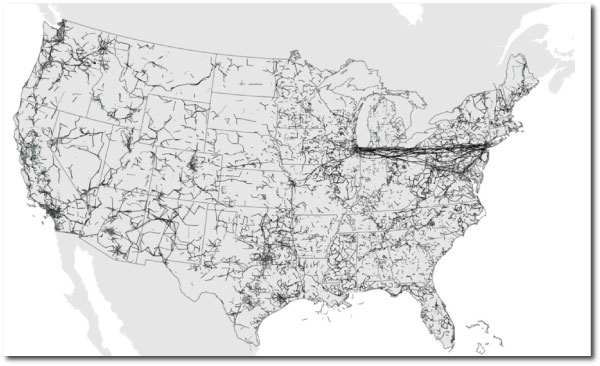

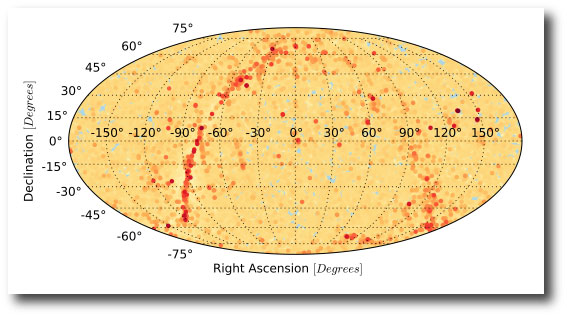

Planet hunters use several kinds of data to find planets around other stars. Very few exoplanets have been detected by direct imaging because planets don’t produce their own light and are usually hidden in the glare of a bright star. A widely used method for exoplanet discovery, known as the radial velocity method, measures the tiny wobble induced in a star by the gravitational tug of an orbiting planet. Motion of the star is detected as shifts in the stellar spectrum–the different wavelengths of starlight measured by a sensitive spectrometer, such as the APF’s Levy Spectrometer. Scientists can derive a planet’s mass and orbit from radial velocity data.
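To give a sense of how tiny that wobble is, here is a minimal sketch using the standard two-body expression for the radial velocity semi-amplitude, K = (2πG/P)^(1/3) · m sin i / (M + m)^(2/3) / √(1 − e²). The function name, the rounded constants, and the choice of Jupiter and Earth as test cases are illustrative assumptions; none of this is taken from the Systemic code.

```python
import math

# Rounded physical constants in SI units (illustrative values).
G = 6.67430e-11          # gravitational constant, m^3 kg^-1 s^-2
M_SUN = 1.989e30         # kg
M_JUP = 1.898e27         # kg
M_EARTH = 5.972e24       # kg
YEAR = 365.25 * 86400.0  # seconds in a Julian year


def rv_semi_amplitude(period_s, m_planet, m_star, ecc=0.0, sin_i=1.0):
    """Stellar radial velocity semi-amplitude K in m/s (hypothetical helper).

    Uses the standard two-body result:
    K = (2*pi*G/P)^(1/3) * m*sin(i) / (M + m)^(2/3) / sqrt(1 - e^2)
    """
    return (
        (2.0 * math.pi * G / period_s) ** (1.0 / 3.0)
        * m_planet * sin_i
        / (m_star + m_planet) ** (2.0 / 3.0)
        / math.sqrt(1.0 - ecc ** 2)
    )


# Edge-on orbits (sin i = 1) around a Sun-like star.
k_jupiter = rv_semi_amplitude(11.862 * YEAR, M_JUP, M_SUN, ecc=0.049)
k_earth = rv_semi_amplitude(1.0 * YEAR, M_EARTH, M_SUN, ecc=0.017)

print(f"Jupiter analog: K = {k_jupiter:.2f} m/s")
print(f"Earth analog:   K = {k_earth:.3f} m/s")
```

Under these assumptions a Jupiter analog tugs its star at roughly 12 m/s, while an Earth analog manages only about 9 cm/s, which is why the method leans on very stable spectrometers. Note that radial velocities alone constrain m sin i, not the true mass.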

Another method detects planets that pass in front of their parent star, causing a slight dip in the brightness of the star. Known as the transit method, this approach can determine the size and orbit of the planet.
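The size of that dip follows from simple geometry: to first order, the fractional drop in brightness is the ratio of the planet's disk area to the star's, (R_planet / R_star)². The sketch below assumes a uniformly bright stellar disk (no limb darkening) and a planet that crosses the disk completely; the function name and the rounded radii are illustrative assumptions rather than anything from the press release.

```python
# Rounded radii in meters (illustrative values).
R_SUN = 6.957e8
R_JUP = 7.1492e7
R_EARTH = 6.371e6


def transit_depth(r_planet, r_star):
    """Fractional brightness drop during transit (hypothetical helper).

    First-order estimate: ratio of projected disk areas, ignoring limb
    darkening and assuming the planet lies fully on the stellar disk.
    """
    return (r_planet / r_star) ** 2


depth_jupiter = transit_depth(R_JUP, R_SUN)
depth_earth = transit_depth(R_EARTH, R_SUN)

print(f"Jupiter analog: {depth_jupiter * 100:.2f}% dip")
print(f"Earth analog:   {depth_earth * 1e6:.0f} parts per million")
```

With these numbers a Jupiter-sized planet dims a Sun-like star by about 1 percent, and an Earth-sized planet by a bit under 100 parts per million. Because the depth depends on the radius ratio, the planet's size comes out only as well as the star's radius is known.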

Both of these methods rely on repeated observations of periodic variations in starlight. When multiple planets orbit the same star, the variations in brightness or radial velocity are very complex. Systemic Console is designed to help scientists explore and analyze this type of data. It can combine data from different telescopes, and even different types of data if both radial velocity and transit data are available for the same star. Systemic includes a large array of tools for deriving the orbital properties of planetary systems, evaluating the stability of planetary orbits, generating animations of planetary systems, and performing a variety of technical analyses.

“Systemic Console aggregates data from the full range of resources being brought to bear on extrasolar planets and provides an interface between these subtle measurements and the planetary systems we’re trying to find and describe,” Meschiari said.

Laughlin said he was struck by the fact that, while the techniques used to find exoplanets are extremely subtle and difficult, the planet discoveries that emerge from these obscure techniques have generated enormous public interest. “These planet discoveries have done a lot to create public awareness of what’s out there in our galaxy, and that’s one reason why we wanted to make this work more accessible,” he said.

Support for the development of the core scientific routines underlying the Systemic Console was provided by an NSF CAREER Award to Laughlin.