Pe·dan·tic narrowly, stodgily, and often ostentatiously learned — a pedantic blog poster.

Man, that word hits kinda close to home. At any rate, in the usual vein, and at the risk of being pedantic, I’ll take the opportunity to point out that one gets maximum bandwidth if one transfers data via a physical medium.

The Microsoft Azure Data Box cloud solution lets you send terabytes of data into and out of Azure in a quick, inexpensive, and reliable way. The secure data transfer is accelerated by shipping you a proprietary Data Box storage device. Each storage device has a maximum usable storage capacity of 80 TB and is transported to your datacenter through a regional carrier. The device has a rugged casing to protect and secure data during the transit.

Microsoft Azure Documentation
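To put a rough number on the sneakernet claim, here is a minimal sketch of the effective bandwidth of a shipped device. The 80 TB capacity comes from the quote above; the two-day transit time is purely an assumption for illustration, the function name is hypothetical, and the time needed to copy data onto and off the device is ignored.

```python
def effective_bandwidth_gbps(capacity_tb: float, transit_days: float) -> float:
    """Average data rate, in gigabits per second, of shipping a full device.

    Assumes decimal terabytes (1 TB = 1e12 bytes) and counts only the
    time spent in transit, not the time to load or unload the device.
    """
    bits = capacity_tb * 1e12 * 8          # total payload in bits
    seconds = transit_days * 86_400        # transit time in seconds
    return bits / seconds / 1e9


# 80 TB is the usable capacity quoted above; 2 days is an assumed transit time.
rate = effective_bandwidth_gbps(capacity_tb=80, transit_days=2)
print(f"Effective bandwidth: {rate:.2f} Gbit/s")
```

Under those assumptions the box averages a few gigabits per second, and the figure scales linearly with the number of boxes on the truck.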

The sneakernet principle comes up regularly in astronomy. Basically, the idea is that something is ejected (either purposefully or by a natural process) and then delivered to our Solar System. Panspermia. ISOs. Smashed-up Dyson Spheres. Flying Saucers. In the Desch-Jackson theory for ‘Oumuamua, shards of nitrogen ice are chipped off exo-Plutos and forge their lonely way across the interstellar gulfs to the Solar System.

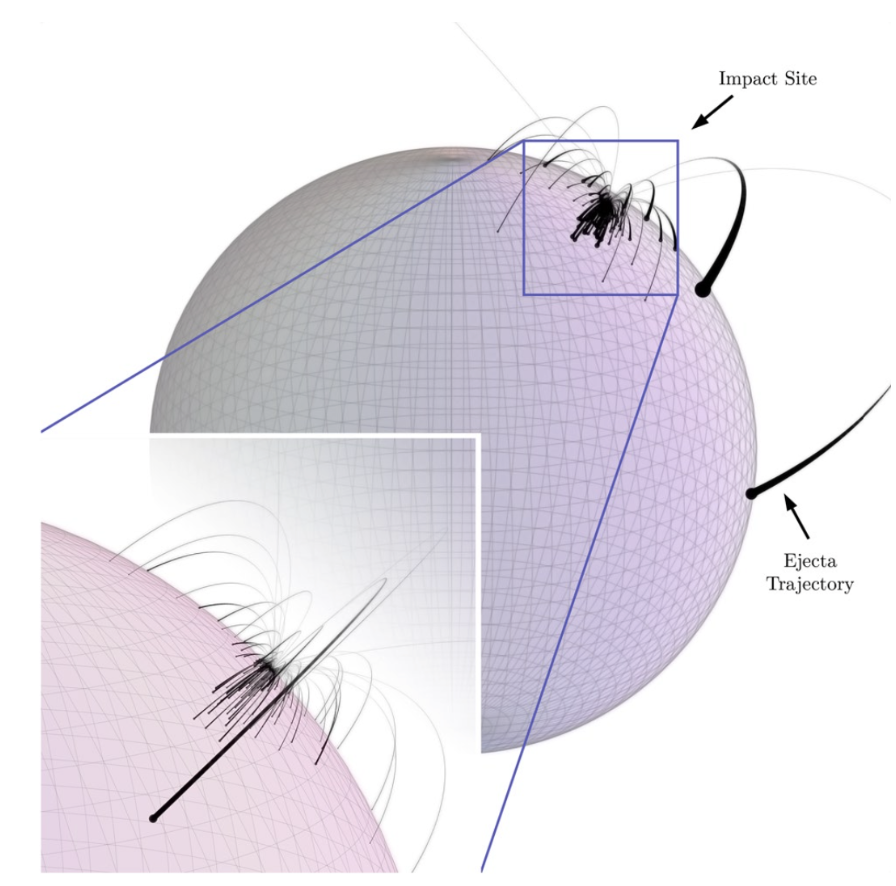

Simulation of an impact on the nitrogen glacier of an exo-Pluto.

In the case of CNEOS 2014-01-08, several sneakernet or sneakernet-adjacent theories have been proposed. In a recent example, it is posited that rocky planets undergo tidal disruption upon close encounters with dense M-dwarf stars. (At the risk of being pedantic, it’s enjoyable to point out that Proxima Centauri is five times denser than lead, thereby packing a considerable tidal punch.) Following the tidal shredding induced by the encounter, wayward planetary debris is then sprayed out into the galaxy. Some of it eventually winds up on the ocean floor to be dredged up on a magnetic sled.
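For the pedantically inclined, the density comparison can be checked with a few lines of arithmetic. This is only a sketch: the mass and radius adopted for Proxima Centauri below are round, assumed values (about 0.12 solar masses and 0.15 solar radii), not numbers taken from this post, and the helper name is hypothetical.

```python
# Assumed, approximate stellar parameters for Proxima Centauri (solar units).
PROXIMA_MASS_MSUN = 0.12
PROXIMA_RADIUS_RSUN = 0.15

SUN_MEAN_DENSITY = 1.41   # g/cm^3, mean density of the Sun
LEAD_DENSITY = 11.34      # g/cm^3, density of lead at room temperature


def mean_density(mass_msun: float, radius_rsun: float) -> float:
    """Mean density in g/cm^3 of a star given mass and radius in solar units."""
    # Density scales as M / R^3, so work relative to the Sun.
    return SUN_MEAN_DENSITY * mass_msun / radius_rsun**3


rho = mean_density(PROXIMA_MASS_MSUN, PROXIMA_RADIUS_RSUN)
ratio = rho / LEAD_DENSITY
print(f"Proxima mean density: {rho:.0f} g/cm^3, or {ratio:.1f} times lead")
```

With these round inputs the ratio comes out between four and five. Because the density goes as the inverse cube of the radius, a change of a few percent in the adopted radius shifts the answer noticeably.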

The foregoing activity, along with Jensen Huang’s recent comments about using galaxies and planets and stars to power computation, prompts me to pick my hat back up and throw it in the sneakernet ring. The stars themselves act as the computers! A sprinkling of the planned-obsolesced debris eventually gets recycled into primitive meteorites. A crack team of cosmo-chemists concludes that the low-entropy material they’ve been puzzling over in a recently recovered carbonaceous chondrite is best explained as … Now look, this is all much too outré for the sober academic literature, but it’s nothing if not aspirationally extravagant, even if the odds of it working out are (liberally?) estimated at one part in ten to the eight. Here’s the paper (unpublished, of course) and here’s the abstract.

If global energy expenditures for artificial irreversible computation continue to increase at the current rate, the required power consumption will exceed the power consumption of the biosphere in less than a century. This conclusion holds, moreover, even with the assumption that all artificial computation proceeds with optimal thermodynamic efficiency. Landauer’s limit for the minimum energy, E_min = (ln 2) k T, associated with an irreversible bit operation thus provides a physical constraint on medium-term economic growth, and motivates a theoretical discussion of computational “devices” that utilize astronomical resources. A remarkably attractive long-term possibility to significantly increase the number of bit operations that can be done would be to catalyze the outflow from a post-main-sequence star to produce a dynamically evolving structure that carries out computation. This paper explores the concept of such astronomical-scale computers and outlines the corresponding constraints on their instantiation and operation. We also assess the observational signature of these structures, which would appear as luminous (L ~ 1000 L_sun) sources with nearly blackbody spectral energy distributions and effective temperatures T = 150 - 200 K. Possible evidence for past or extant structures may arise in pre-solar grains within primitive meteorites, or in the diffuse interstellar absorption bands, both of which could display anomalous entropy signatures.
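The numbers in the abstract are easy to play with. The sketch below evaluates Landauer’s limit, E_min = (ln 2) k T, and then makes two idealizing assumptions that are mine rather than the paper’s: that the full luminosity is spent on bit operations at the Landauer limit for the quoted effective temperature, and that the structure radiates as a single spherical blackbody. The function names are hypothetical, and the results are order-of-magnitude upper bounds at best.

```python
import math

K_B = 1.380649e-23        # Boltzmann constant, J/K (exact in SI)
SIGMA_SB = 5.670374419e-8 # Stefan-Boltzmann constant, W m^-2 K^-4
L_SUN = 3.828e26          # nominal solar luminosity, W
AU = 1.495978707e11       # astronomical unit, m


def landauer_energy(temperature_k: float) -> float:
    """Minimum energy in joules to erase one bit at the given temperature."""
    return math.log(2) * K_B * temperature_k


def max_bit_rate(luminosity_w: float, temperature_k: float) -> float:
    """Upper bound on irreversible bit operations per second.

    Assumes (idealization) that all of the luminosity pays for bit
    erasures at the Landauer limit for this temperature.
    """
    return luminosity_w / landauer_energy(temperature_k)


def blackbody_radius(luminosity_w: float, temperature_k: float) -> float:
    """Radius in meters of a spherical blackbody with this L and T."""
    flux = SIGMA_SB * temperature_k**4          # emitted power per unit area
    return math.sqrt(luminosity_w / (4 * math.pi * flux))


luminosity = 1000 * L_SUN   # L ~ 1000 L_sun, as quoted in the abstract
print(f"Room temperature (300 K): {landauer_energy(300):.2e} J per bit")
for temp in (150, 200):     # effective temperatures quoted in the abstract
    rate = max_bit_rate(luminosity, temp)
    radius_au = blackbody_radius(luminosity, temp) / AU
    print(f"T = {temp} K: {rate:.1e} bit ops/s, radius {radius_au:.0f} AU")
```

Under these assumptions the ceiling is of order 10^50 bit operations per second, and the radiating surface is on the order of 100 to 200 AU in radius, which is to say larger than the planetary region of the Solar System.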