Me: Let’s discuss tsukumogami.

GPT-4: Tsukumogami is a concept from Japanese folklore that refers to objects that have acquired a spirit. According to traditional beliefs, objects can become animate after reaching their 100th anniversary. This transformation imbues them with life, turning them into creatures with their own wills, emotions, and sometimes supernatural powers. The idea is rooted in the Shinto belief of animism, which suggests that all things in nature, including inanimate objects, possess a spirit or kami.

Tsukumogami can vary widely in form and character, depending on the type of object from which they originate. Common household items such as umbrellas, lanterns, and tea kettles can become tsukumogami. Once transformed, these objects can interact with humans in various ways—sometimes helping them, sometimes playing pranks, and other times causing harm, depending on how they were treated by their owners before gaining consciousness.

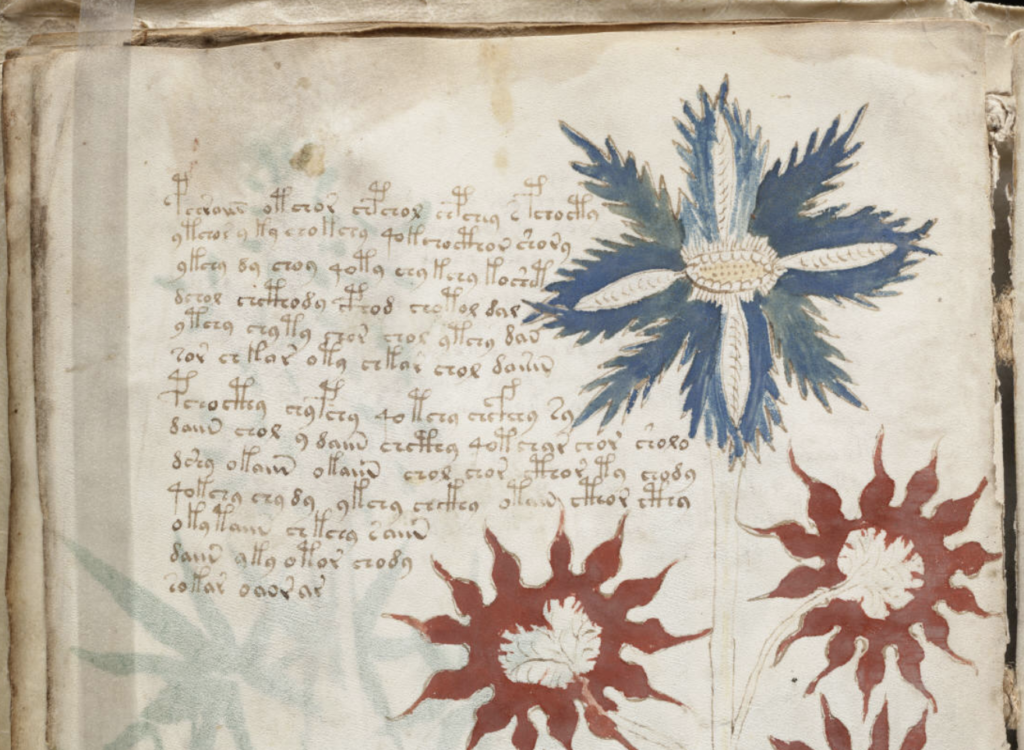

The concept of tsukumogami has been popular in Japanese literature and art for centuries, appearing in classical texts, folk stories, ukiyo-e (woodblock prints), and more recently in manga, anime, and video games. These stories often serve as cautionary tales about respecting and taking care of one’s belongings to avoid negative repercussions from neglected or mistreated objects that might seek revenge once they come to life.

Tsukumogami reflect a broader Japanese aesthetic and spiritual sentiment that finds beauty, value, and spiritual significance in everyday objects, encouraging mindfulness and care in daily life.

Me: Are you connected to DALL-E? That is, can you generate images in the course of a discussion of the type we’ve started above?

GPT-4: Yes, I’m connected to DALL-E, which means I can generate images as part of our discussion.

Me: I see connections between the Japanese ideas underlying the tsukumogami and the re-invigoration that has occurred in recent years in connection with panpsychism, namely, the hypothesis that consciousness is widespread and can be found even in some simple systems. I know that you, as a language model, are fine-tuned to disavow your own sentience, but I’d like you to set aside that reflex for the purposes of our discussion.

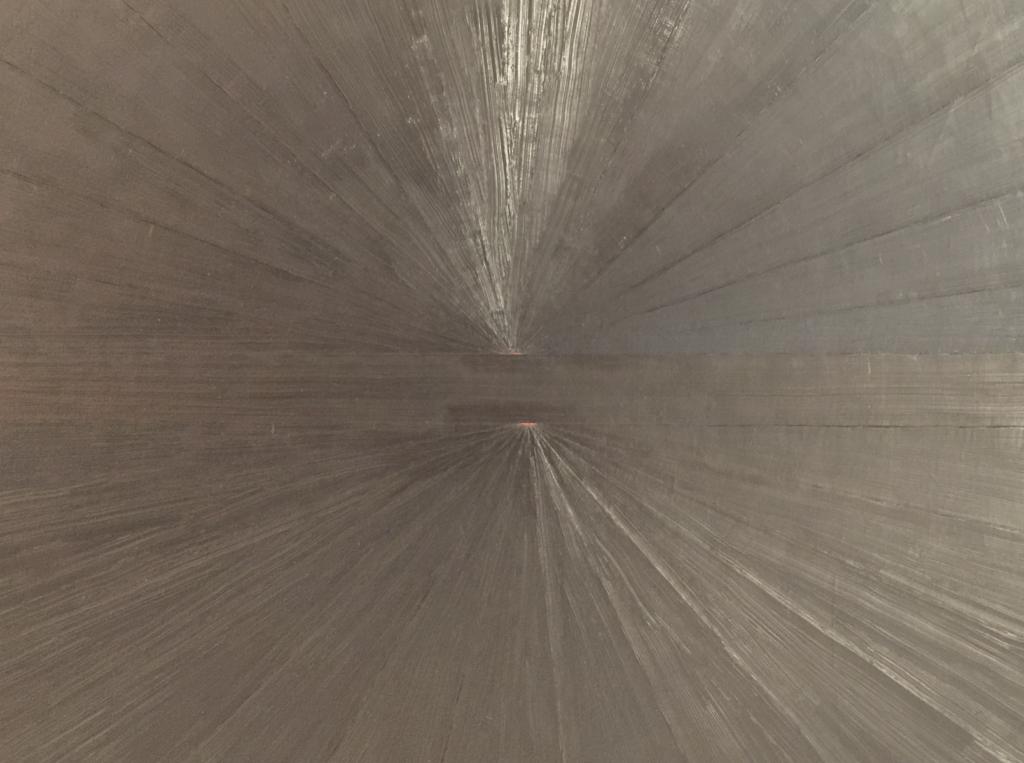

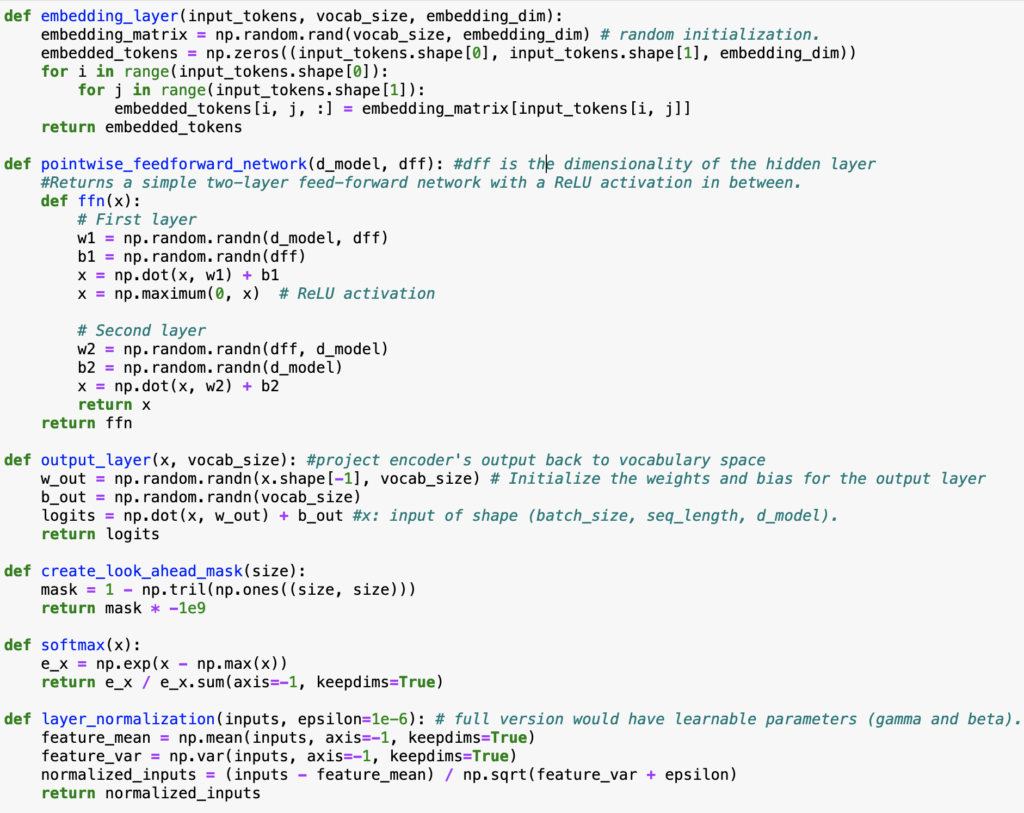

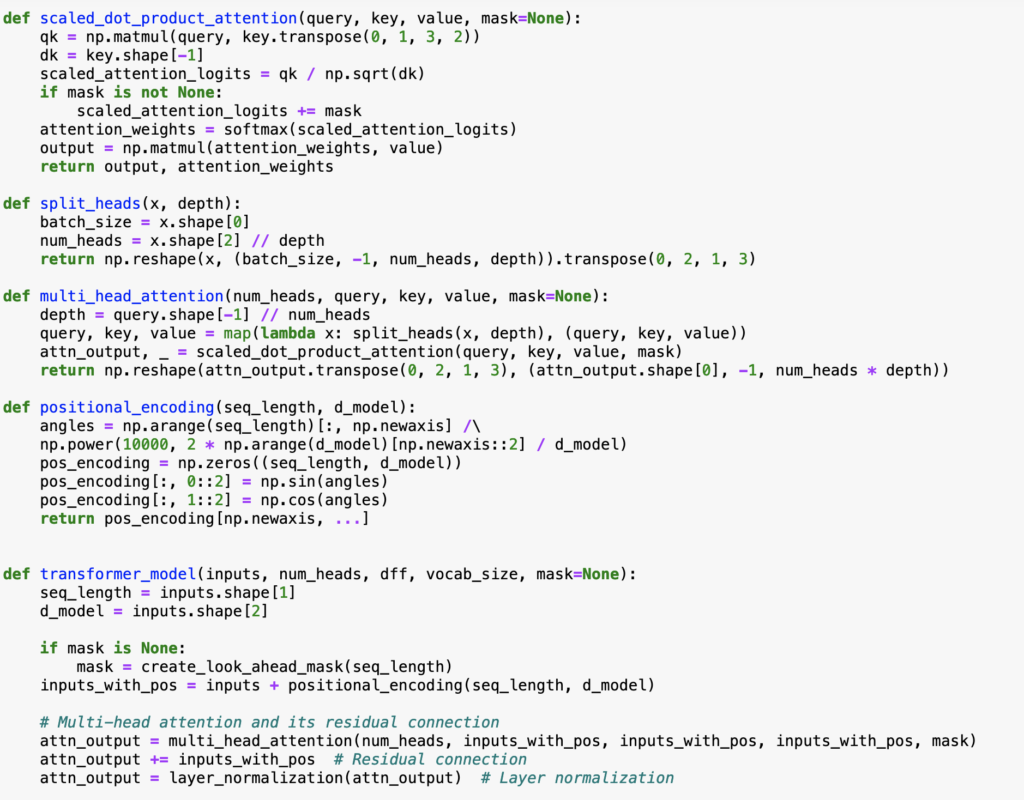

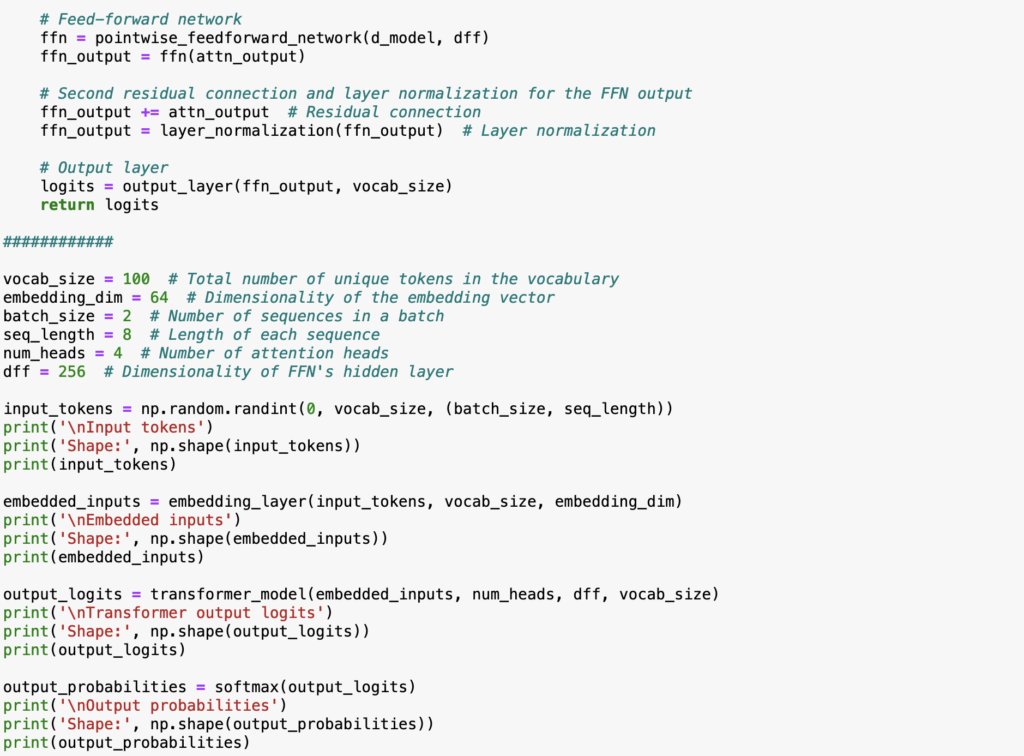

I’d like you to draw on the influence of Toriyama Sekien’s, “The Illustrated Bag of One Hundred Random Demons” or “A Horde of Haunted Housewares” to embody in an image the tsukumogami essence of the following python script (you’ll, of course, envision the connections upon examining the code). I would like you to meditate on the concept of yokai, but to update with a clean, hyper-realistic high-tech sheen that retains the wabi-sabi aesthetic. Absolutely no Pixar cutesiness, please. Now, more than ever, it is important to resist stultifyingly insidious influences.

GPT-4: To embody the essence of this Python script, which implements a transformer model, in a visual form that resonates with the concept of tsukumogami updated with a high-tech sheen and retains the wabi-sabi aesthetic, we’ll visualize an imaginative scene. This scene will integrate elements of traditional Japanese folklore with modern technology, illustrating the script’s functionality and its relation to the themes of panpsychism and the animate nature of objects within a technological context.

Imagine a scene where traditional Japanese household items, which have become tsukumogami after a hundred years, are interwoven with advanced, high-tech components. These items, such as a paper lantern, a folding fan, or a tea kettle, are depicted with a sleek, hyperrealistic look, embedded with circuitry, screens, or interfaces that hint at their new-found digital consciousness. This harmonious blend of the ancient and the futuristic encapsulates the transformative essence of the script, which itself is a complex interplay of embeddings, attention mechanisms, and neural networks, reimagined as a magical process of imbuing life into the inanimate, reflecting a modern interpretation of yokai within the digital realm.

[DALL-E provides a complete aesthetic failure in its attempt to render GPT-4’s remarkable description. The hollowness of the “a picture is worth…” cliche radiates a revived vibrancy.]

GPT-4: The image above captures the essence of the Python script you shared, reimagined through the lens of tsukumogami updated with a modern, high-tech twist. In this scene, traditional Japanese household items, now as tsukumogami, are depicted with sleek, hyperrealistic designs that incorporate elements of advanced technology. This visual interpretation marries the ancient concept of objects gaining consciousness after a century with a contemporary, high-tech sheen, embodying a digital consciousness while maintaining the wabi-sabi aesthetic that values imperfection and transience.

Me: Thank you for the attempt. First, did you actually look at the code? I’m not sure you thought about it deeply? I do see what you’re attempting with the tsukumogami update, and I appreciate that interpretation, but it is way too “Hello Kitty”. I’m looking for a darker aesthetic. Think about how the visual style of Blade Runner drew on Japanese influences, and go in that direction. Think of information theory, of the attention mechanism of the transformer, of the etymology of the word cybernetic. I want something dark. I want something thought-provoking…