Most oklo.org readers know the story line of Fred Hoyle’s celebrated 1957 science fiction novel, The Black Cloud. An opaque, self-gravitating mass of gas and dust settles into the solar system, blots out the sun, and wreaks havoc on the biosphere. It gradually becomes clear that the cloud itself is sentient. Scientists mount an attempt to communicate. A corpus of basic scientific and mathematical principles is read out loud in English, voice-recorded, and transmitted by radio to the cloud.

The policy was successful, too successful. Within two days the first intelligible reply was received. It read:

“Message received. Information slight. Send more.”

For the next week almost everyone was kept busy reading from suitably chosen books. The readings were recorded and then transmitted. But always, there came short replies demanding more information, and still more information…

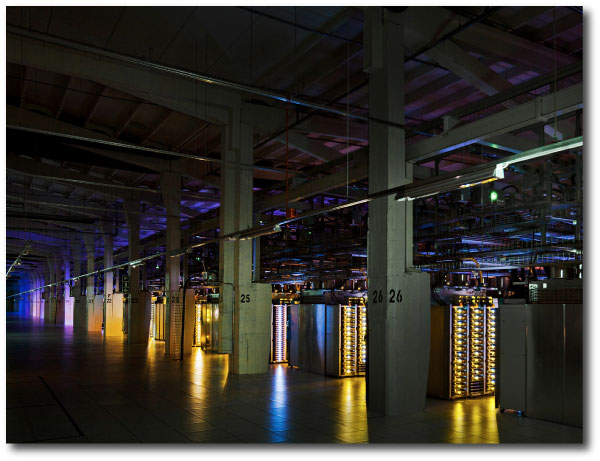

Sixty years later, communicating interstellar clouds are still in the realm of fiction, but virtualized machines networked in the cloud are increasingly dictating the course of events in the real world.

In Hoyle’s novel, the initial interactions with the Black Cloud are quite reminiscent of a machine learning task. The Cloud acts as a neural network. Employing the information uploaded in the training set, it learns to respond to an input vector (a query as a sequence of symbols) with a sensible output vector. Throughout the story, however, there’s an implicit assumption that the Cloud is self-conscious and aware; nowhere is it intimated that the processes within the Cloud might simply be an algorithm managing to pass an extension of the Turing Test. On the basis of the clear quality of its output vectors, the Cloud’s intelligence is taken as self-evident.

The statistics-based regimes of machine learning are on a seemingly unstoppable roll. A few years ago, I noticed that Flickr became oddly proficient at captioning photographs. Under the hood, an ImageNet classification with convolutional neural networks (or the like) was suddenly focused, with untiring intent, on scenes blanketing the globe. Human mastery of the ancient game of Go has been relinquished. Last week, I was startled to read Andrej Karpathy’s exposition of the unreasonable effectiveness of recurrent neural networks.

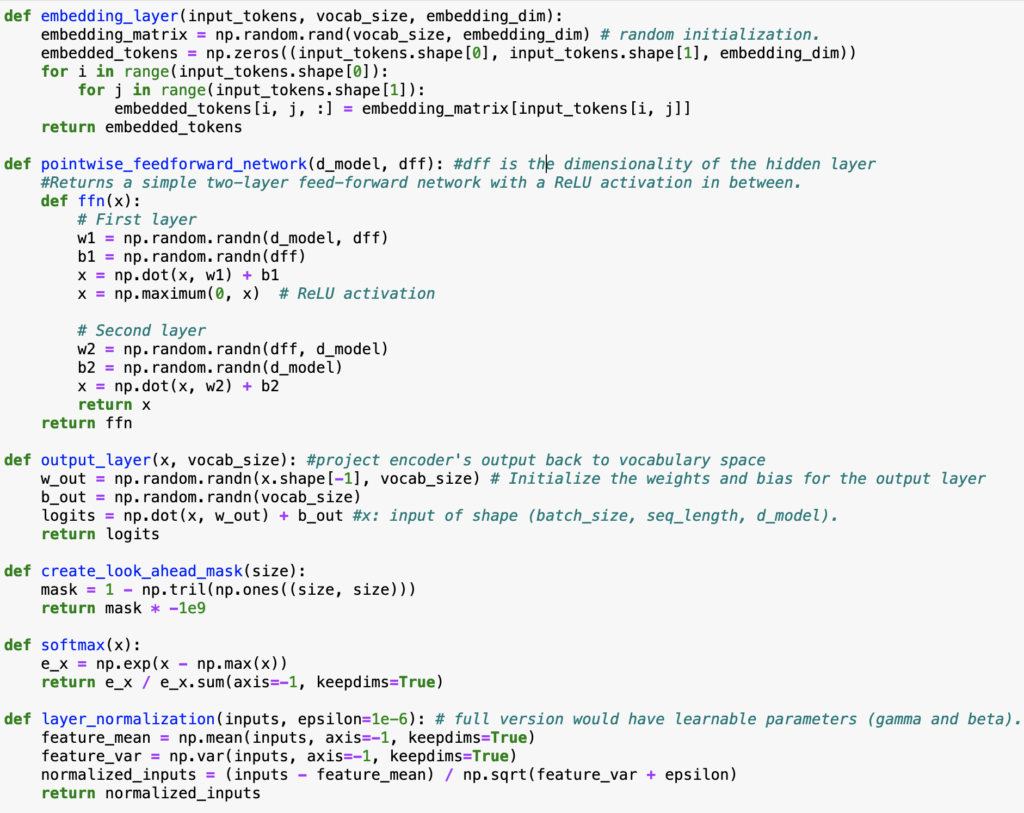

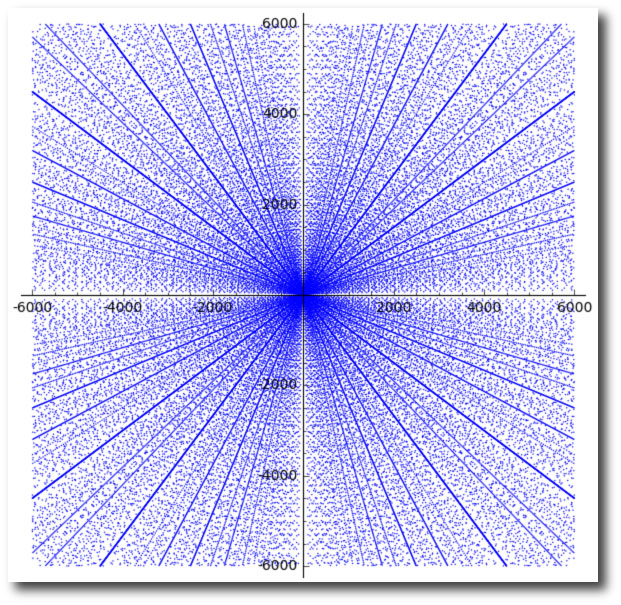

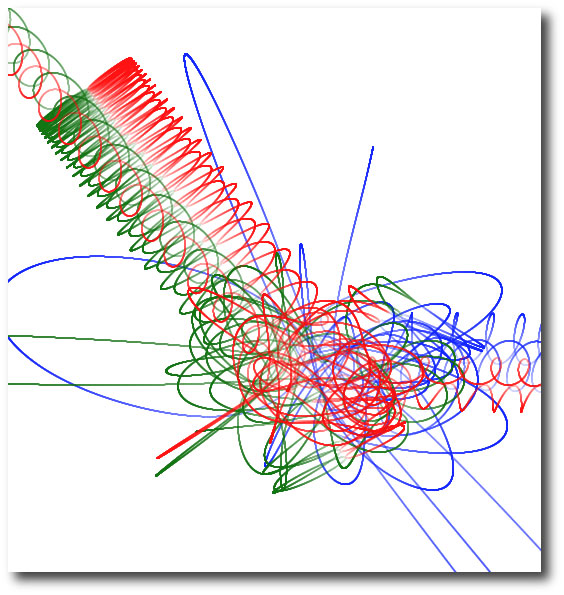

By drawing from a large mass of example text, a recurrent neural network (RNN) character-level language model learns to generate new text one character at a time. Each new letter, space, or punctuation mark draws its appearance from everything that has come before it in the sequence, intimately informed by what the algorithm has absorbed from its fund of information. As to how it really works, I’ll admit (as well) to feeling overwhelmed, to not quite knowing where to begin. This mind-numbingly literal tutorial on backpropagation is of some help. And taking a quantum leap forward, Justin Johnson has written a character-level language model, torch-rnn, which is well-documented and available on GitHub.
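To make the idea of each character drawing on everything before it a little more concrete, here is a minimal pure-Python sketch of a vanilla RNN character model. It is a toy built on stated assumptions: the weights are random rather than trained by backpropagation, biases are omitted, and torch-rnn itself trains LSTM layers, which add gating on top of this basic recurrence. The class and function names are hypothetical.

```python
import math
import random


def softmax(logits):
    """Turn raw scores into probabilities (max subtracted for numerical stability)."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(W, v):
    """Multiply a matrix stored as a list of rows by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def random_matrix(rng, rows, cols, scale=0.1):
    """Small Gaussian weights; a trained model would learn these instead."""
    return [[rng.gauss(0.0, scale) for _ in range(cols)] for _ in range(rows)]


class TinyCharRNN:
    """An untrained vanilla RNN over characters (random weights, biases omitted)."""

    def __init__(self, vocab, hidden_size=16, seed=0):
        rng = random.Random(seed)
        self.vocab = list(vocab)
        self.index = {ch: i for i, ch in enumerate(self.vocab)}
        V, H = len(self.vocab), hidden_size
        self.W_xh = random_matrix(rng, H, V)  # input character -> hidden
        self.W_hh = random_matrix(rng, H, H)  # previous hidden -> hidden (the recurrence)
        self.W_hy = random_matrix(rng, V, H)  # hidden -> score for each next character
        self.h = [0.0] * H                    # hidden state summarizing everything seen so far

    def step(self, ch):
        """Consume one character and return P(next character) over the vocabulary."""
        x = [0.0] * len(self.vocab)
        x[self.index[ch]] = 1.0  # one-hot encoding of the input character
        pre = [a + b for a, b in zip(matvec(self.W_xh, x), matvec(self.W_hh, self.h))]
        self.h = [math.tanh(z) for z in pre]
        return softmax(matvec(self.W_hy, self.h))

    def generate(self, prompt, n, rng):
        """Feed a non-empty prompt, then sample n further characters one at a time."""
        for ch in prompt[:-1]:
            self.step(ch)
        probs = self.step(prompt[-1])
        out = list(prompt)
        for _ in range(n):
            nxt = rng.choices(self.vocab, weights=probs)[0]
            out.append(nxt)
            probs = self.step(nxt)  # the sampled character becomes the next input
        return "".join(out)


rnn = TinyCharRNN(sorted(set("the picture of dorian gray")))
print(rnn.generate("the ", 40, random.Random(1)))
```

Untrained, it emits gibberish, much as a barely trained checkpoint would; training adjusts `W_xh`, `W_hh`, and `W_hy` so that the probabilities returned by `step` come to match the corpus.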

In Karpathy’s post, RNNs are set to work generating text that amuses but nonetheless seems reassuringly far removed from any real utility. A Paul Graham generator willingly dispenses Silicon Valley “thought leader” style bon mots concerning startups and entrepreneurship. All of Shakespeare is fed into the network, and dialogue emerges in an unending stream that is, at least at the phrase-to-phrase level, unkindly indistinguishable from the real thing.

I’m very confident that it would be a whole lot more enjoyable to talk to Oscar Wilde than to William Shakespeare. As true A.I. emerges, it may do so in a cloud of aphorisms, of which Wilde was the undisputed master, “I can resist everything except temptation…”

Wilde employed a technique for writing The Picture of Dorian Gray in which he first generated piquant observations, witty remarks, and descriptive passages, and then assembled the plot around them. This ground-up compositional style seems somehow confluent with the processes (the magic) that occur in an RNN.

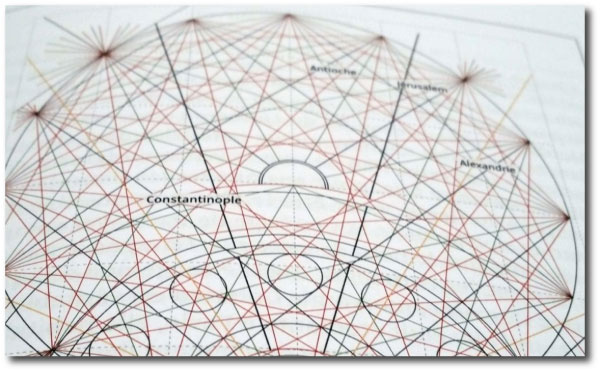

The uncompressed plain-text UTF-8 version of Dorian Gray is a 433,701-character sequence. This comprises a fairly small training set, so it needs a supplement. The obvious choice to append to the corpus is A rebours (Against Nature), Joris-Karl Huysmans’s 1884 classic of decadent literature.

Even more than Wilde’s text, A rebours is written as a series of almost disconnected thumbnail sketches, containing extensive, minutely inlaid descriptive passages. The overall plot fades largely into the background, and is described, fittingly, in one of the most memorable passages from Dorian Gray.

It was a novel without a plot and with only one character, being, indeed, simply a psychological study of a certain young Parisian who spent his life trying to realize in the nineteenth century all the passions and modes of thought that belonged to every century except his own, and to sum up, as it were, in himself the various moods through which the world-spirit had ever passed, loving for their mere artificiality those renunciations that men have unwisely called virtue, as much as those natural rebellions that wise men still call sin. The style in which it was written was that curious jewelled style, vivid and obscure at once, full of argot and of archaisms, of technical expressions and of elaborate paraphrases, that characterizes the work of some of the finest artists of the French school of Symbolistes. There were in it metaphors as monstrous as orchids and as subtle in colour.

A rebours attached to Dorian Gray constitutes a 793,587-character sequence, and after some experimentation with torch-rnn, I settled on the following invocation to train a multilayer LSTM:

```console
MacBook-Pro:torch-rnn Greg$ th train.lua -gpu -1 -max_epochs 100 -batch_size 1 -seq_length 50 -rnn_size 256 -input_h5 data/dorianGray.h5 -input_json data/dorianGray.json
```
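The `-seq_length 50` flag sets how many characters the network is unrolled over for each training step. The sketch below illustrates the general scheme for a character-level model (map characters to integers, then cut the stream into input windows whose targets are the same characters shifted one place ahead). It is an assumption that this mirrors torch-rnn in spirit rather than in detail: torch-rnn's own `scripts/preprocess.py` builds the vocabulary and writes the `.h5`/`.json` files, and its loader does the batching. The helper names here are hypothetical.

```python
def build_vocab(text):
    """Map each distinct character to an integer index, and keep the reverse list."""
    chars = sorted(set(text))
    return {ch: i for i, ch in enumerate(chars)}, chars


def encode(text, char_to_idx):
    """Convert text into the integer sequence the network actually sees."""
    return [char_to_idx[ch] for ch in text]


def training_windows(ids, seq_length):
    """Yield (input, target) pairs; each target is its input shifted one character ahead."""
    for start in range(0, len(ids) - seq_length, seq_length):
        x = ids[start:start + seq_length]
        y = ids[start + 1:start + seq_length + 1]
        yield x, y


char_to_idx, chars = build_vocab("the picture of dorian gray")
ids = encode("the picture of dorian gray", char_to_idx)
for x, y in training_windows(ids, 5):
    print("".join(chars[i] for i in x), "->", "".join(chars[i] for i in y))
```

With a batch size of 1, a 793,587-character stream would yield (793587 - 1) // 50 = 15,871 such windows per pass if every character went to training; in practice a fraction is held out for validation, so the real count per epoch is smaller.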

My laptop lacks an Nvidia graphics card, so the task fell (via the -gpu -1 flag, which selects CPU-only mode) to its 2.2 GHz Intel Core i7. The code ran for many hours. Lying in bed at night in the quiet, dark house, I could hear the fan straining to dissipate the heat from the processor. What would it write?

This morning, I sat down and sampled the results. The neural network that emerged from the laptop’s all-nighter generates Wilde-Huysmans-like text assembled one character at a time:

```console
MacBook-Pro-2:torch-rnn Greg$ th sample.lua -gpu -1 -temperature 0.5 -checkpoint cv/checkpoint_1206000.t7 -length 5000 > output.txt
```
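The `-temperature` flag in the sampling command controls how adventurous the sampler is. The sketch below shows the standard temperature-scaled softmax (divide each character's score by T, then normalize), assuming the model supplies raw per-character scores (logits). It is not torch-rnn's code, and the function names and toy scores are hypothetical.

```python
import math
import random


def apply_temperature(logits, temperature):
    """Rescale scores by 1/T and normalize with a softmax.

    T < 1 sharpens the distribution (safer, more repetitive text);
    T > 1 flattens it (more surprising, more error-prone text).
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next(chars, logits, temperature, rng):
    """Draw one character according to the temperature-adjusted probabilities."""
    probs = apply_temperature(logits, temperature)
    return rng.choices(chars, weights=probs)[0]


scores = [2.0, 1.0, 0.0]  # hypothetical scores for the characters "e", "a", "x"
print(apply_temperature(scores, 1.0))
print(apply_temperature(scores, 0.5))
print(sample_next(list("eax"), scores, 0.5, random.Random(0)))
```

For these toy scores the likeliest character gets probability of about 0.67 at T = 1 and about 0.87 at T = 0.5, which is why a low setting like the 0.5 used here yields more conventional, if more repetitive, text.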

I opened the output and looked over the first lines. It is immediately clear that a 2015-era laptop running downloaded GitHub code all night can offer no competition, in any sense, to either Mr. Wilde or Mr. Huysmans. An abject failure of the Turing Test, a veritable litany of nonsense:

After the charm of the thread of colors, the nineteenth close to the man and passions and cold with the lad's heart in a moment, whose scandal had been left by the park, or a sea commonplace plates of the blood of affectable through the club when her presence and the painter, and the certain sensation of the capital and whose pure was a beasts of his own body, the screen was gradually closed up the titles of the black cassion of the theatre, as though the conservatory of the past and carry, and showing to me the half-clide of which it was so as the whole thing that he would not help herself. I don't know what will never talk about some absorb at his hands.

But we are not more than about the vice. He was the cover of his hands. "You were in his brain."

"I was true," said the painter was strangled over to us. It is not been blue chapter dreadfully confesses in spite of the table, with the desert of his hands in her vinations, and he mean about the screen enthralled the lamp and red books and causes that he was afraid that he could see the odious experience. It was a perfect streating top of pain.

"What is that, I am sorry I shall have something to me that you are not the morning, Mr. Gray," answered the lad, and that the possession of colorings, which were the centre of the great secrets of an elaborate curtain.

You cannot believe that I was thinking of the moon.

He was to be said that the world is the restive of the book to the charm of a matter of an approvingian through a thousand serviced it again. The personality of the senses by the servants were into the shadow of the next work to enter, and he had revealed to the conservatory for the morning with his wife had been an extraordinary rooms that was always from the studio in his study with a strange full of jars, and stood between them, or thought who had endured to know what it is.

"Ah, Mr. Gray?"

"I am a consolation to be able to give me back to the threat me."

But such demands are excessive. The text is readable English, convened in a headlong rush by a program that could just as easily have been synthesizing grant proposals or algebraic topology. Torch-rnn contains no grammar rules, no dictionaries, no guides to syntax. And it really does learn over time. Looking at the early checkpoint snapshots of the network, during epochs when words and spaces are forming, before any sense of context has emerged, one finds only vaguely English-like streams of gibberish:

pasticite his it him. "It him to his was paintered the cingring the spure, and then the sticice him come and had to him for of a was to stating to and mome am him himsed at he some his him, and dist him him in on of his lime in stainting staint of his listed."

Perhaps the best comparison for torch-rnn’s current laptop-powered, overnight-effort capabilities is to William S. Burroughs’ cut-up novels (The Soft Machine, The Ticket That Exploded), where one sees disjoint masses of text full of randomized allusions, but where an occasional phrase sparkles like a diamond in matrix: “…a vast mineral consciousness near absolute zero thinking in slow formations of crystal…”

In looking over a few thousand characters of text generated from checkpoint 1,206,000 at temperature T=0.61, one finds glimmers of recurrent, half-emerged truths:

You are sure to be a fragrant friend, a soul for the emotions of silver men.