Writer’s block. Procrastination. Envy of those for whom words flow smoothly! Luxurious blocks of text. Paragraphs, Essays, Books.The satisfying end results of productivity made real.

Or, as Dorothy Parker put it, “I hate writing, I love having written.”

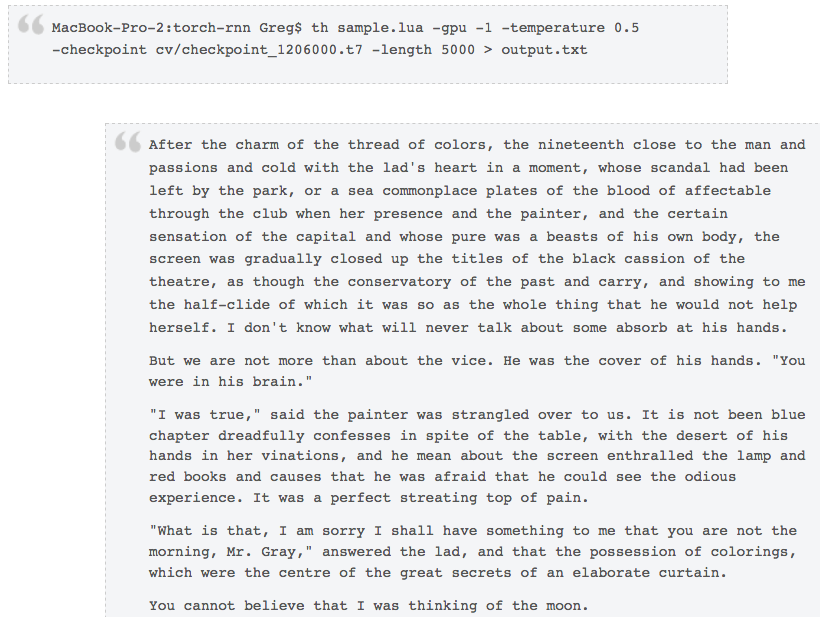

Over the past few years, this dynamic has kept me both keenly and uneasily interested in natural language generation — the emerging ability of computers to produce coherent prose. In a post that went up just under four years ago, I reported on experiments that used Torch-rnn to write in the vein of Oscar Wilde and Joris-Karl Huysmans, the acknowledged masters of the decadent literary style. A splendidly recursive quote from the Picture of Dorian Gray has Wilde describing the essence of Huysmans’ A Rebours.

Based on a 793587-character training set composed of the two novels, 2017-era laptop-without-a-GPU level language modeling — which worked by predicting the next character in sequence, one after another — could channel the aesthetic of décadence for strings of several words in a row, and could generate phrases, grammar and syntax more or less correctly. But there was zero connection from one sentence to the next. The results were effectively gibberish. Disappointing.

In the interim, progress in machine writing has been accelerating. Funding for artificial intelligence research is ramping up. Last year, a significant threshold was achieved by GPT-3, a language model developed and announced by OpenAI. The model contains 175 billion parameters and was trained (at a cost of around $4.6M) on hundreds of billions of words of English-language text. It is startlingly capable.

A drawback to GPT-3 is that it’s publicly available only through awkward, restrictive interfaces, and it can’t be fine-tuned in its readily available incarnations. “A.I. Dungeons” anyone? But its precursor model, the 2019-vintage GPT-2, which contains a mere 1.5 billion parameters, is open source and python wrappers for it are readily available.

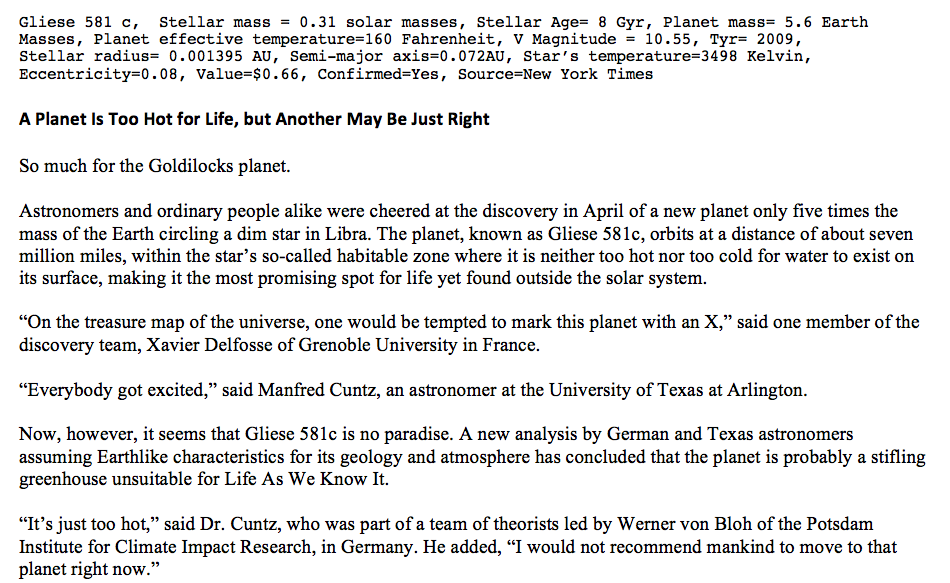

For many years, oklo.org was primarily devoted to extrasolar planets. Looking back through the archives, one can find various articles that I wrote about the new worlds as they arose. One can also look back at contemporary media reports of the then-latest planetary discoveries. Here’s a typical example from a decade ago, the beginning of an article written by Dennis Overbye for the New York Times.

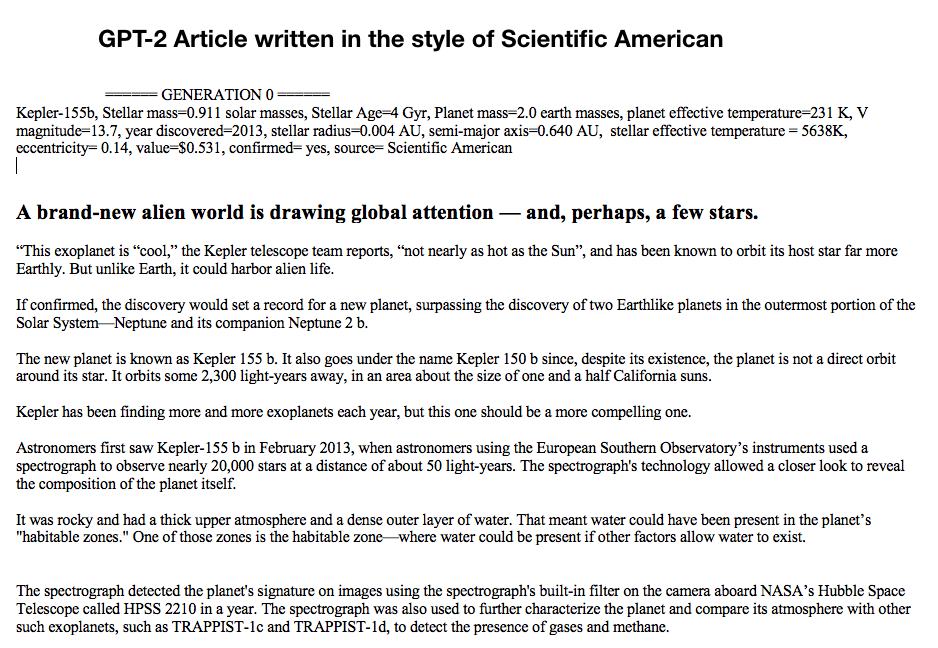

In collaboration with Simone Williams, a Yale undergraduate student, we scraped the media archives from the past two decades to assemble a library of news articles describing the discovery of potentially habitable extrasolar planets. Once all the articles were collected, we developed a consistent labeling schema, an example of which is shown just below. The Courier-font text is a summary “prompt” containing the characteristics of the planet being written about, as well as a record of the article’s source, while the Times-font text is the actual article describing an actual detected planet (with the title consistently bolded in san serif). In this case, it’s another piece by Dennis Overbye from 2007 reporting Gliese 581 c:

A benefit of the GPT series is that they are pre-trained. Fine tuning on the corpus of articles takes less than an hour using Google cloud GPUs.

And the result?

Here’s an imaginary article describing the discovery of a completely manufactured planet (albeit with a real name) with completely manufactured properties.

It’s definitely not perfect, but it’s also not that bad…