I keep thinking about the remarkable mapping of the fruit fly brain. Those annoying pests darting above the sticky spill each have 139,255 brain cells with 50 million connections, and a computational throughput that's naively similar to GPT-2, all while running much, much closer to the Landauer limit and costing negative dollars. Given that such a setup exists, it's hard to shake the feeling that transformers are on the verge of being completely deprecated by some radical new algorithmic paradigm. But what's it gonna be? Which direction is it going to come from?
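For a sense of scale, the Landauer limit is the minimum energy needed to erase one bit of information: $k_B T \ln 2$. Here is a minimal Python sketch of that arithmetic. It assumes a temperature of 298 K, which is my own room-temperature stand-in and not a measured figure for flies. The function name `landauer_limit_joules` is likewise just an illustrative choice.

```python
import math

# Boltzmann constant in J/K (exact by definition in the 2019 SI).
BOLTZMANN_K = 1.380649e-23


def landauer_limit_joules(temperature_kelvin: float) -> float:
    """Minimum energy (J) to erase one bit at the given temperature: k_B * T * ln(2)."""
    return BOLTZMANN_K * temperature_kelvin * math.log(2)


if __name__ == "__main__":
    # Assumption: roughly room temperature, 298 K.
    e_bit = landauer_limit_joules(298.0)
    print(f"{e_bit:.3e} J per erased bit")
```

At room temperature this comes to a few times $10^{-21}$ J per bit. Any real hardware, silicon or neural, sits some multiple above that floor.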

So one sifts for clues. As an outsider, that's tricky. Sure, the TED-talking charlatans are easy to spot. It's not hard to discount the deep-learning equivalent of some astrobiologist going on about sampling microbes spewing out of Enceladus, phosphine-emitting life on Venus, or biosignatures on extrasolar planets in the habitable zone.

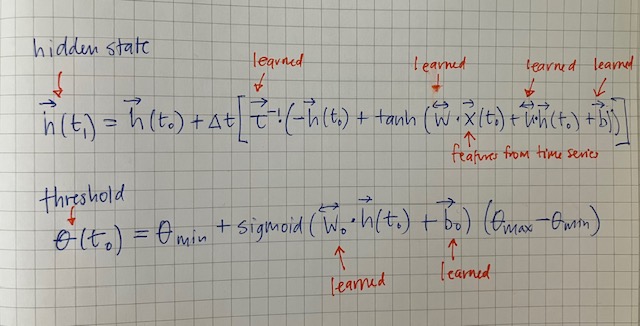

In this vein, I'm currently struggling to understand whether Liquid Neural Networks are the real deal. The various Bayesian priors exude radically conflicting signals. A mushy article in Quanta claims that the researchers, "the driving forces behind the new design, realized years ago that C. elegans could be an ideal organism to use for figuring out how to make resilient neural networks that can accommodate surprise." That definitely sounds like hype of the let's-look-for-the-red-edge variety. At the same time, it's true that C. elegans pulls off quite a bit with its measly 302 neurons and 7,000 synapses. Plus, bonus points for just using Euler's method to integrate ODEs given the weird right-hand sides: