That ever-shifting fungibility between dollars, bit operations, and ergs has been a recurring theme here at oklo dot org for over a decade now. I think this was the first article on the topic, complete with a now-quaint, but then-breathless report of a Top-500 chart-topper capable of eking out 33.8 petaflop/s while drawing 17,808 kW. A single instance of Nvidia’s dope new B200 chip can churn out 20 petaflop/s (admittedly at grainy FP4 resolution) while drawing 1 kW. “Amazing what they can do these days”.
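For a rough sense of scale, the two data points above can be turned into operations per watt. This is only a back-of-envelope sketch using the figures quoted in the paragraph; the variable and function names are made up for illustration, and the comparison is not like-for-like, since Top-500 rankings are based on 64-bit Linpack while the B200 figure is FP4.

```python
# Figures quoted in the paragraph above (names are illustrative).
TOP500_OPS_PER_S = 33.8e15      # 33.8 petaflop/s
TOP500_WATTS = 17_808 * 1e3     # 17,808 kW
B200_OPS_PER_S = 20e15          # 20 petaflop/s at FP4
B200_WATTS = 1 * 1e3            # 1 kW


def ops_per_watt(ops_per_s: float, watts: float) -> float:
    """Operations per second delivered for each watt drawn."""
    return ops_per_s / watts


old_eff = ops_per_watt(TOP500_OPS_PER_S, TOP500_WATTS)
new_eff = ops_per_watt(B200_OPS_PER_S, B200_WATTS)
ratio = new_eff / old_eff

print(f"Top-500 machine: {old_eff:.2e} ops/s per watt")
print(f"B200 (FP4):      {new_eff:.2e} ops/s per watt")
print(f"Ratio:           {ratio:,.0f}x")
```

On these numbers the ratio comes out at roughly ten thousand, with the precision caveat carrying a good share of that gain.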

Despite the efficiency gains, the sheer number of GPUs being manufactured is driving computational energy usage through the roof. There was a front-page article yesterday in the WSJ about deal-making surrounding nuclear-powered data centers. Straightforward extrapolations point toward Earth’s entire insolation budget being consumed within decades in the service of flipping bits. It thus seems likely that a lot will hinge on getting reversible computing to work at scale if there’s going to be economic growth on timescales beyond one to two decades.

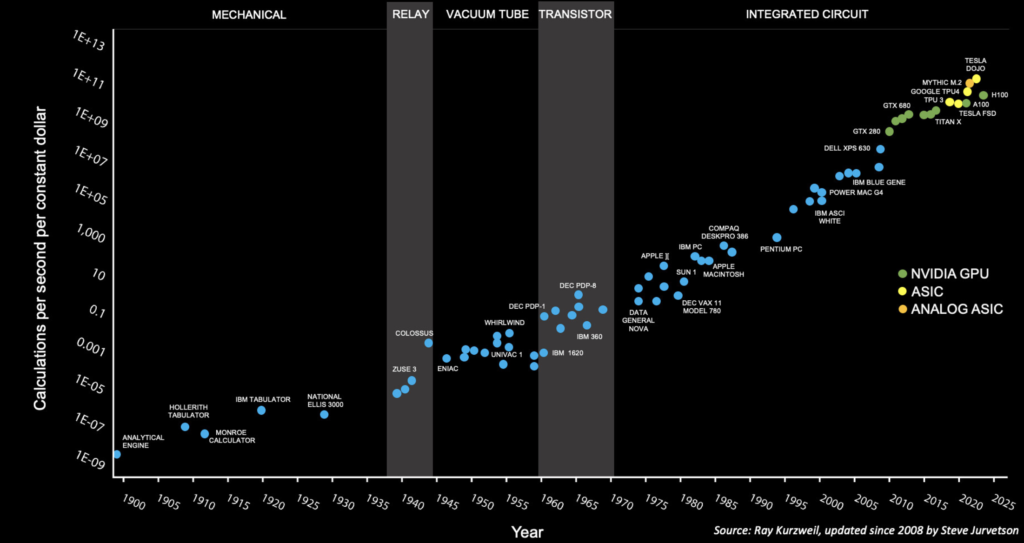

The Kurzweil-Jurvetson chart (copied just above) shows how computational cost efficiency is characterized by a double exponential trend. The Bitter Lesson, however, indicates that the really interesting breakthroughs hinge on massive computation. The result is that energy use outstrips the efficiency gains that themselves proceed at a pace of order a thousand-fold (or more) per decade. MSFT, NVDA, AAPL, AMZN, META, and GOOG are now the top-ranked firms by market capitalization.

This year, META (as an example) is operating 600,000 H100 equivalents in its data centers. Assuming a $40K cost for each one, that’s a $24B investment. Say the replacement life for this hardware is 3 years. That’s an $8B yearly cost. Assume 10 cents/kWh for electricity and a draw of roughly 1 kW per H100 equivalent. META’s power bill is then of order $60K/hour, or $0.5B/yr. Power is thus about 6% of the hardware cost. The graph above doesn’t take the power bill explicitly into account because it hasn’t yet been material.
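The arithmetic in that paragraph is easy to replay. The sketch below uses only the numbers quoted above, plus one assumption that is implied rather than stated: a draw of about 1 kW per H100 equivalent, which is what it takes to arrive at a $60K/hour power bill.

```python
# Inputs from the paragraph above.
GPUS = 600_000                 # H100 equivalents
COST_PER_GPU = 40_000          # dollars
REPLACEMENT_YEARS = 3
PRICE_PER_KWH = 0.10           # dollars
KW_PER_GPU = 1.0               # ASSUMPTION: implied by the $60K/hour figure
HOURS_PER_YEAR = 24 * 365

capex = GPUS * COST_PER_GPU                           # total hardware outlay
hardware_per_year = capex / REPLACEMENT_YEARS         # amortized yearly cost
power_per_hour = GPUS * KW_PER_GPU * PRICE_PER_KWH    # hourly power bill
power_per_year = power_per_hour * HOURS_PER_YEAR      # yearly power bill
power_share = power_per_year / hardware_per_year      # power relative to hardware

print(f"Hardware outlay:   ${capex / 1e9:.0f}B")
print(f"Hardware per year: ${hardware_per_year / 1e9:.0f}B")
print(f"Power per hour:    ${power_per_hour / 1e3:.0f}K")
print(f"Power per year:    ${power_per_year / 1e9:.2f}B")
print(f"Power / hardware:  {power_share:.1%}")
```

Without rounding the yearly power bill down to $0.5B, the ratio lands between 6% and 7%, which doesn't change the conclusion.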

Nvidia’s H100s will be ceding their spots to the B200s and their equivalents over the coming year. Competition from AMD, Intel, et al. will likely keep META’s hardware cost roughly constant year-on-year, and their total number of bit operations will increase in accordance with the curve that runs through the points on the graph. The B200s, however, draw 40% more power. At the rate things are going, it will thus take about eight years for power costs to exceed hardware costs to run computation at scale.
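One way to get to the eight-year figure is a simple compounding model. The sketch below is my reading of the argument, not a forecast: it assumes the hardware bill stays flat, the power bill starts at about 6% of it, and power draw keeps growing 40% with each yearly hardware generation. The function name is made up for illustration.

```python
import math


def years_to_crossover(initial_ratio: float, annual_growth: float) -> float:
    """Years until power cost equals hardware cost.

    ASSUMPTIONS: hardware cost is constant, and power cost compounds at
    `annual_growth` per year from `initial_ratio` of the hardware cost.
    Solves initial_ratio * (1 + annual_growth) ** n == 1 for n.
    """
    return math.log(1.0 / initial_ratio) / math.log(1.0 + annual_growth)


years = years_to_crossover(initial_ratio=0.06, annual_growth=0.40)
print(f"Power matches hardware cost after about {years:.1f} years")
```

The result is only logarithmically sensitive to the starting ratio, so nudging the 6% up or down moves the crossover by months rather than years.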

This dense deck from Sandia National Laboratories seems like an interesting point of departure to start getting up to speed on reversible computing.