Time has a way of sliding by. More than five years ago, I wrote a blog post with a suggestion for a new cgs unit, the oklo, which describes the rate per unit mass of computation done by a given system:

1 oklo = 1 bit operation per gram per second

With limited time (and limited expertise), it can be tricky to size up the exact maximum performance in oklos of that new iPhone 12 I’ve been eyeing. Benchmarking sites suggest that Apple’s A14 “Bionic” SoC delivers 824 Gflops at 32-bit precision, so (with Tim Cook in charge of the rounding) an iPhone runs at roughly a trillion oklos. Damn.
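The oklo arithmetic can be sketched in a few lines. This is a minimal illustration under two assumptions that are not in the post: each 32-bit flop is counted as 32 bit operations, and the phone’s mass is taken as 164 g (Apple’s published figure for the iPhone 12). The helper names are hypothetical.

```python
# Minimal sketch: convert a flop rate into oklos (bit operations per gram per second).
# ASSUMPTIONS (not from the post): one 32-bit flop counts as 32 bit operations,
# and the device mass is 164 g.


def oklos(bit_ops_per_second: float, mass_grams: float) -> float:
    """Return computational throughput per unit mass, in oklos."""
    return bit_ops_per_second / mass_grams


def flops_to_bit_ops(flops: float, bits_per_flop: int = 32) -> float:
    """Hypothetical convention: count each flop as `bits_per_flop` bit operations."""
    return flops * bits_per_flop


a14_flops = 824e9         # 824 Gflops (32-bit), the benchmark figure quoted above
iphone12_mass_g = 164.0   # assumed device mass in grams

rating = oklos(flops_to_bit_ops(a14_flops), iphone12_mass_g)
print(f"{rating:.2e} oklos")
```

Under this conservative convention the result is about 1.6 × 10¹¹ oklos. The figure scales directly with the bit-operations-per-flop convention, which the post does not specify; counting the gate-level work inside a floating-point operation would push it higher.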

Computational energy efficiency also keeps improving. Proof-of-work cryptocurrencies such as Bitcoin have thrown the equivalence of power, money and bit operations into stark relief. The new Antminer S19 Pro computes SHA-256 hashes at an energy cost of about one erg per five million bit operations, a performance that’s only about six million times worse than the Landauer limit. There is still tremendous room for efficiency improvements, but the hard floor is starting to come into view.
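The comparison with the Landauer limit is a one-line division: the energy per bit operation over k_B T ln 2. The sketch below works in cgs units to match the post; the 300 K temperature is an assumption, since the post does not state which temperature it used.

```python
import math

# Boltzmann constant in cgs units (erg per kelvin).
K_B_ERG_PER_K = 1.380649e-16


def landauer_limit_erg(temperature_k: float) -> float:
    """Minimum energy to erase one bit at temperature T: k_B * T * ln 2, in erg."""
    return K_B_ERG_PER_K * temperature_k * math.log(2)


def times_above_landauer(erg_per_bit_op: float, temperature_k: float) -> float:
    """How many times the per-operation energy exceeds the Landauer limit."""
    return erg_per_bit_op / landauer_limit_erg(temperature_k)


# Antminer S19 Pro figure from the text: about one erg per five million bit operations.
s19_erg_per_op = 1.0 / 5e6

# ASSUMPTION: waste heat is dumped at room temperature, taken here as 300 K.
ratio = times_above_landauer(s19_erg_per_op, 300.0)
print(f"{ratio:.1e}")
```

At 300 K this gives roughly 7 × 10⁶, the same order as the six-million figure above; the exact multiple shifts with the temperature assumed, since the Landauer cost is proportional to T.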

For over a century, it’s been straightforward to write isn’t-it-amazing-what-they-can-do-these-days posts about the astonishing rate of technological progress.

> Where a calculator like ENIAC today is equipped with 18,000 vacuum tubes and weighs 30 tons, computers in the future may have only 1000 vacuum tubes and perhaps weigh only 1½ tons.

And indeed, there’s a certain intellectual laziness associated with taking the present-day state of the art and then projecting it forward into the future. Exponential growth in linear time has a way of making one’s misses seem unremarkable. “But I was only off by a few years!”

Fred Adams was visiting, and we were sitting around the kitchen table talking about the extremely distant future.

We started batting around the topic of computational growth. “Given the current rate of increase of artificial irreversible computation, and given the steady increase in computational energy efficiency, how long will it be before the 10¹⁷ watts that Earth gets from the Sun are all used in service of bit operations?”

As with many things exponential, the answer comes startlingly soon: about 90 years. In other words, the long-term trend of terrestrial economic growth will end within a lifetime. A computational energy crisis looms.
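The shape of the estimate can be sketched with a simple exponential model. This is an assumed model, not the calculation behind the 90-year figure: power devoted to computation is taken to grow each year by the ratio of the growth in bit operations to the growth in efficiency, so the time to the limit is a logarithm. The post does not list its inputs, so the numbers in the usage line are placeholders, and the function name is hypothetical.

```python
import math

SOLAR_INPUT_W = 1e17  # solar power intercepted by Earth, per the text


def years_until_limit(power_now_w: float, power_limit_w: float,
                      ops_growth_per_year: float,
                      efficiency_growth_per_year: float) -> float:
    """Years until computational power draw reaches `power_limit_w`.

    Assumed model: power = (bit operations per year) / (bit operations per joule),
    so it grows by the factor ops_growth / efficiency_growth each year.
    """
    net_growth = ops_growth_per_year / efficiency_growth_per_year
    if net_growth <= 1.0:
        return math.inf  # efficiency keeps pace, so the limit is never reached
    return math.log(power_limit_w / power_now_w) / math.log(net_growth)


# Placeholder inputs for illustration only: a 1024-fold gap to close, with computation
# growing 4x per year while efficiency improves 2x per year (a net doubling).
print(years_until_limit(SOLAR_INPUT_W / 1024, SOLAR_INPUT_W, 4.0, 2.0))
```

With a net doubling each year, a 1024-fold gap closes in about ten years. The point of the logarithm is that even a gap of many orders of magnitude costs only a modest number of doublings.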

In any conversation along such lines, it’s a small step from Dyson Spheres to Kardashev Type N++. If you want to do a lot of device-based irreversible computation, what is the most effective strategy?

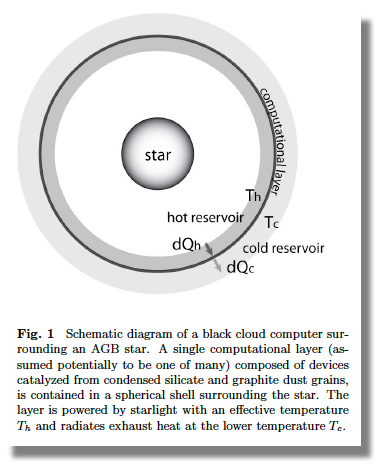

A remarkably attractive solution is to catalyze the outflow from a post-main-sequence star to produce a dynamically evolving wind-like structure that carries out computation. Extreme AGB (pre-planetary nebula phase) stars have lifetimes of order ten thousand years, generate thousands of solar luminosities, produce prodigious quantities of device-ready graphene, and have photospheres near room temperature.
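One way to see the appeal of a cool, luminous star is the Landauer bound: the power available divided by k_B T ln 2 caps the rate of irreversible bit operations. The sketch below assumes 1000 solar luminosities (the low end of “thousands”), an operating temperature of 300 K (“near room temperature”), and the IAU nominal solar luminosity; it is an idealized upper bound, not a result from the paper.

```python
import math

K_B_ERG_PER_K = 1.380649e-16   # Boltzmann constant, erg/K
L_SUN_ERG_PER_S = 3.828e33     # IAU nominal solar luminosity, erg/s


def landauer_bit_ops_per_second(luminosity_erg_s: float, temperature_k: float) -> float:
    """Upper bound on irreversible bit operations per second if the whole
    luminosity were spent at the Landauer limit k_B T ln 2."""
    return luminosity_erg_s / (K_B_ERG_PER_K * temperature_k * math.log(2))


# ASSUMPTIONS for illustration: 1000 solar luminosities and a 300 K operating temperature.
agb_rate = landauer_bit_ops_per_second(1e3 * L_SUN_ERG_PER_S, 300.0)
earth_rate = landauer_bit_ops_per_second(1e24, 300.0)  # 10^17 W = 10^24 erg/s
print(f"{agb_rate:.1e} bit ops/s, {agb_rate / earth_rate:.1e} times Earth's solar budget")
```

This gives roughly 10⁵⁰ bit operations per second, nearly 4 × 10¹² times what Earth’s 10¹⁷ W would support at the same temperature. Because the bound scales as 1/T, a room-temperature photosphere matters as much as the raw luminosity.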

We (Fred and I, along with Khaya Klanot and Darryl Seligman) wrote a working paper that goes into detail. In particular, it sets out the hydrodynamical solution that characterizes the structures. We’re posting it here for anyone interested.

As we remark in the abstract, “Possible evidence for past or extant structures may arise in presolar grains within primitive meteorites, or in the diffuse interstellar absorption bands, both of which could display anomalous entropy signatures.”