How ’bout NVDA? Yesterday, at 4:20 PM ET, after the market close, the GPU manufacturer smoked the analysts’ expectations with a report of blow-out earnings. Fiscal fourth-quarter revenues clocked in at USD 22B, more than triple the revenue reported in fourth-quarter 2022.

In retrospect, given the excitement regarding generative AI, and given that Nvidia’s H100 chip has an outright unholy ability to push embeddings through attention blocks with mind-boggling rapidity, the gargantuan jump in profit seems in line with expectation. For me, at least, transformers really have been transformational.

CEO Jensen Huang was quoted on the call with a construction that caught my eye:

“If you assume that computers never get any faster, you might come to the conclusion we need 14 different planets and three different galaxies and four more suns to fuel all this,” Mr. Huang said. “But obviously computer architecture continues to advance.”

Jensen’s random.Generator.shuffle(x, axis=0) of the astronomical distance ladder brought Lenny Kravitz to mind:

I want to get away

I want to fly away

Yeah, yeah, yeah

Let’s go and see the stars

The Milky Way or even Mars

Where it could just be ours

Or even Mars. Object ordering aside, there’s an unmistakable mainstreaming afoot of oklo.org’s long-running preoccupation with the energy costs of computation as viewed with cosmic perspective. I like to riff on the Landauer limit, which puts a thermodynamic floor on the energy required to flip a bit, namely E=ln(2) k_B T, where k_B is the Boltzmann constant. At room temperature, k_B T is about 4.1e-14 ergs, so it takes at least 2.9e-14 ergs to imagine turning a zero into a one.
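For anyone who wants to check the floor for themselves, here is a minimal sketch of the E=ln(2) k_B T arithmetic in CGS units. The 300 K room temperature and the function name `landauer_limit_erg` are my own assumptions for illustration. It prints both k_B T and ln(2) k_B T, since the two differ by a factor of about 0.69.

```python
import math

# Boltzmann constant in erg/K (the exact SI value 1.380649e-23 J/K, times 1e7 erg/J).
K_B_ERG_PER_K = 1.380649e-16

# Assumed value for "room temperature"; pick your own thermostat setting.
ROOM_TEMPERATURE_K = 300.0


def landauer_limit_erg(temperature_k: float) -> float:
    """Minimum energy, in ergs, to flip one bit at the given temperature."""
    return math.log(2) * K_B_ERG_PER_K * temperature_k


thermal_energy_erg = K_B_ERG_PER_K * ROOM_TEMPERATURE_K
bit_flip_floor_erg = landauer_limit_erg(ROOM_TEMPERATURE_K)

print(f"k_B T at {ROOM_TEMPERATURE_K:.0f} K:       {thermal_energy_erg:.2e} erg")
print(f"ln(2) k_B T at {ROOM_TEMPERATURE_K:.0f} K: {bit_flip_floor_erg:.2e} erg")
```

The limit scales linearly with temperature, so a colder computer has a lower floor.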

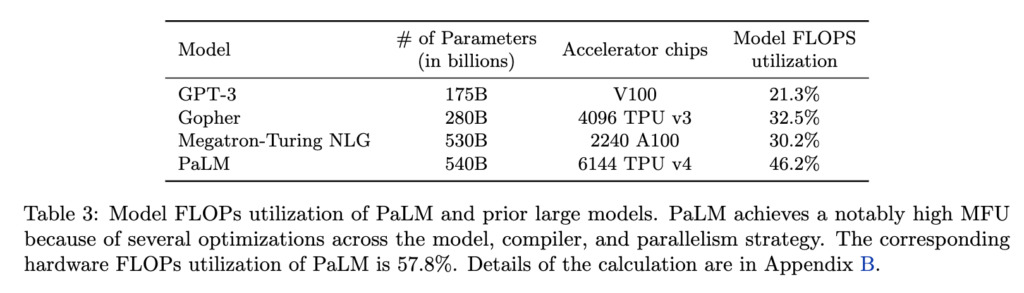

It’s exceedingly difficult to get GPU accelerators to run LLM inference workloads at theoretical performance. The PaLM paper has a table with some typical efficiencies:

Those utilization numbers are not for lack of effort. When training an LLM under the guidance of competent hands, an H100 is likely doing of order 10^15 bit operations per second, while drawing 700 W. Nvidia is slated to produce 2 million H100s this year. Once they’re hooked up, they’ll be flipping about 10^15 × 3×10^7 × 2×10^6 ~ 10^29 bits per year (6e-7 oklo), while drawing 1.4 GW, which comes to about 12 TWh per year, or 0.05% of global electricity usage. Seems like a small price to pay for a lot more of this.
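The back-of-the-envelope numbers above can be reproduced in a few lines. This is a sketch, not an audit: the per-GPU rate, power draw, unit count and seconds-per-year figure are the round numbers from the paragraph, while the 25,000 TWh per year for global electricity use and the 8,766 hours per year are round values I am assuming here.

```python
# Round figures taken from the text.
BIT_OPS_PER_SECOND_PER_GPU = 1e15   # order-of-magnitude rate for one H100
SECONDS_PER_YEAR = 3e7              # the usual astronomer's approximation
GPU_COUNT = 2e6                     # H100s slated for production this year
POWER_PER_GPU_W = 700.0

# Assumptions not stated in the text.
HOURS_PER_YEAR = 8766.0                  # 365.25 days
GLOBAL_ELECTRICITY_TWH_PER_YEAR = 25_000.0  # assumed round value

bit_ops_per_year = BIT_OPS_PER_SECOND_PER_GPU * SECONDS_PER_YEAR * GPU_COUNT
fleet_power_gw = GPU_COUNT * POWER_PER_GPU_W / 1e9
fleet_energy_twh = fleet_power_gw * 1e9 * HOURS_PER_YEAR / 1e12
share_of_global = fleet_energy_twh / GLOBAL_ELECTRICITY_TWH_PER_YEAR

print(f"bit operations per year: {bit_ops_per_year:.1e}")
print(f"fleet power draw:        {fleet_power_gw:.2f} GW")
print(f"fleet energy per year:   {fleet_energy_twh:.1f} TWh")
print(f"share of global usage:   {100 * share_of_global:.3f}%")
```

Changing the assumed global total moves the final percentage proportionally, but not by enough to alter the conclusion.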

The power demands of the 2024 crop of H100s would require a square kilometer of full sunshine. Earth’s cross sectional area presents about 130 million square kilometers to the Sun, so Huang’s assessment seems pretty fair.
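The sunshine comparison is a two-line calculation. The sketch below assumes the top-of-atmosphere solar constant of about 1361 W per square meter, perfect conversion of sunlight to electricity, a mean Earth radius of 6371 km, and the 1.4 GW fleet draw from above. Real panels on the ground would need several times more area.

```python
import math

# Assumptions for this sketch.
SOLAR_CONSTANT_W_PER_M2 = 1361.0  # full sunshine at the top of the atmosphere
EARTH_MEAN_RADIUS_KM = 6371.0
FLEET_POWER_W = 1.4e9             # 2 million H100s at 700 W each

# Collecting area needed at 100% conversion efficiency (idealized).
collecting_area_km2 = FLEET_POWER_W / SOLAR_CONSTANT_W_PER_M2 / 1e6

# The disk that Earth presents to the Sun: pi * R^2.
earth_cross_section_km2 = math.pi * EARTH_MEAN_RADIUS_KM**2

fraction_of_disk = collecting_area_km2 / earth_cross_section_km2

print(f"collecting area needed: {collecting_area_km2:.2f} km^2")
print(f"Earth's cross section:  {earth_cross_section_km2:.2e} km^2")
print(f"fraction of the disk:   {fraction_of_disk:.1e}")
```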

But what about those fourteen planets, three galaxies and four suns? Seems like a good opportunity to trot out the Black Clouds. Stay tuned….