For a number of years now, I’ve been a member of an academic collaboration devoted both to studying Internet latency and to designing schemes to generally speed things up on-line. At the end of 2018, our group received an NSF grant to facilitate this research work. Now, three years later, it’s time to submit the final report. As part of the NSF’s close-out process, an accessible research outcomes summary for the general public (containing up to 800 words, and including up to six images) is required. This sounds like a spec list for an oklo.org item, so I’m posting a slightly expanded draft version here.

Everyone knows the frustration of a page that seems to take forever to load. Moreover, even when the interlaced networks that comprise the Web function nominally to deliver requested assets, there exist Internet applications that would benefit dramatically from reduced latency. Examples of such time-sensitive endeavors run the gamut from telepresence and virtual reality to header bidding and blockchain propagation.

At a fundamental level, network speeds are limited by the finite velocity of electromagnetic waves — the speed of light. The maximum propagation speed for light occurs in vacuum. Light is slowed down in air by a negligible amount (0.03%), but in the conventional solid-core fiber optic cables that currently carry the bulk of Internet traffic, light travels at only about 2/3 of the vacuum maximum. Over long distances, and when many back-and-forth exchanges of information are required, this signaling slowdown becomes material. In addition, the actual over-land and under-sea paths taken by cables are often substantially longer than the minimum A to B distance between data centers.
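The size of this slowdown is easy to quantify. The sketch below is a minimal illustration. It assumes a refractive index of 1.5 for solid-core fiber (light at about 2/3 of c) and 1.0003 for air (the ~0.03% slowdown). The `route_factor` parameter is a hypothetical knob for cable routes that are longer than the straight-line distance.

```python
C_KM_PER_MS = 299.792458  # speed of light in vacuum, km per millisecond

# Assumed refractive indices; light travels at c / n in each medium.
REFRACTIVE_INDEX = {"vacuum": 1.0, "air": 1.0003, "fiber": 1.5}


def one_way_latency_ms(distance_km, medium, route_factor=1.0):
    """Propagation delay over a path route_factor times longer than distance_km."""
    return distance_km * route_factor * REFRACTIVE_INDEX[medium] / C_KM_PER_MS


def exchange_latency_ms(distance_km, medium, round_trips, route_factor=1.0):
    """Total propagation delay for a protocol needing several round trips."""
    return 2 * round_trips * one_way_latency_ms(distance_km, medium, route_factor)


if __name__ == "__main__":
    d = 1000.0  # hypothetical 1,000 km separation between two data centers
    for medium in ("vacuum", "air", "fiber"):
        print(medium, round(one_way_latency_ms(d, medium), 3), "ms one way")
    # Hypothetical: fiber route 30% longer than the straight line, 3 round trips
    print("fiber, 3 round trips:", round(exchange_latency_ms(d, "fiber", 3, 1.3), 2), "ms")
```

Under these assumptions, a 1,000 km fiber span costs about 5.0 ms one way, compared with about 3.34 ms in vacuum. That gap is paid again on every round trip and is stretched further by any detour in the cable route.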

Over the last decade, there has been a flurry of construction of long-haul line-of-sight microwave networks that adhere as closely as possible to great-circle paths. These are operated by latency-sensitive trading firms, who, in aggregate, have mounted significant research and development projects to create global information circuits that are as fast as possible, while simultaneously maximizing bandwidth and uptime.

How feasible would it be to take the lessons learned and apply them at scale to speed up the Internet as a whole? This is a tricky question to answer because the fastest existing long-distance networks were entirely privately funded and their performance remains fully proprietary. Just how fast are they, really? How well do they hold up when weather moves through? How much data can they carry?

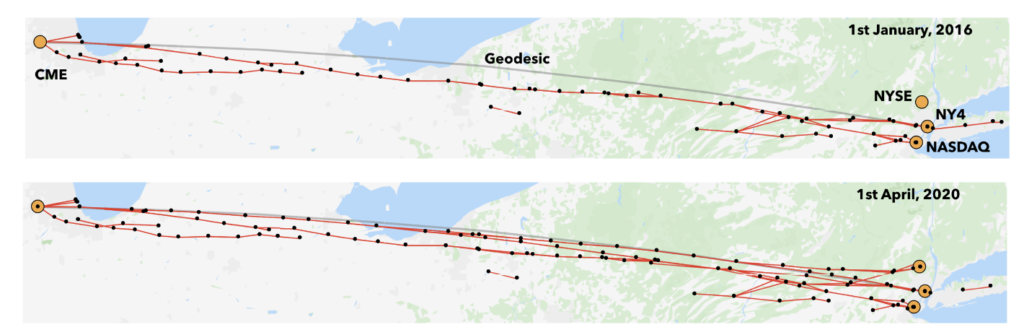

Government database scraping provides a first approach to evaluating the performance of the ultra-low-latency networks. In the US, anyone wishing to communicate with point-to-point microwaves needs an FCC license. The FCC maintains a publicly searchable database of all licenses and licensees, and these data can be assembled to monitor the construction, consolidation, and improvement of point-to-point networks. The figure just below, from our 2020 paper, shows two snapshots in the evolution of the New Line Network, which connects the CME data center in Aurora, Illinois, to the trading centers of suburban New Jersey. Over time, the New Line has clearly grown to provide ever more bandwidth at ever higher availability, with a shortest path that adheres ever more closely to the geodesic.
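Once the tower locations for each licensed hop are in hand, measuring how closely a route "adheres to the geodesic" is a short calculation. The following is a minimal sketch. It assumes the (latitude, longitude) coordinates of a chain of towers have already been extracted from the license records. The parsing step is not shown, and no particular FCC file format is assumed. It also treats the Earth as a sphere of radius 6,371 km and takes 1.0003 as the refractive index of air. The function names are hypothetical.

```python
import math

C_KM_PER_MS = 299.792458   # speed of light in vacuum, km per ms
N_AIR = 1.0003             # assumed refractive index of air
EARTH_RADIUS_KM = 6371.0   # mean Earth radius (spherical approximation)


def great_circle_km(p, q):
    """Haversine distance between two (lat, lon) points given in degrees."""
    lat1, lon1 = map(math.radians, p)
    lat2, lon2 = map(math.radians, q)
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def path_latency_ms(hops):
    """One-way latency through a chain of microwave towers, summed hop by hop."""
    km = sum(great_circle_km(a, b) for a, b in zip(hops, hops[1:]))
    return km * N_AIR / C_KM_PER_MS


def geodesic_latency_ms(hops):
    """Vacuum light-travel time along the great circle from first to last tower."""
    return great_circle_km(hops[0], hops[-1]) / C_KM_PER_MS


def stretch(hops):
    """Ratio of the route's latency to the speed-of-light minimum (>= 1)."""
    return path_latency_ms(hops) / geodesic_latency_ms(hops)
```

For a chain of towers laid out exactly along the great circle, `stretch` bottoms out at about 1.0003, the refractive index of air. Every zigzag in the tower placement pushes it higher.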

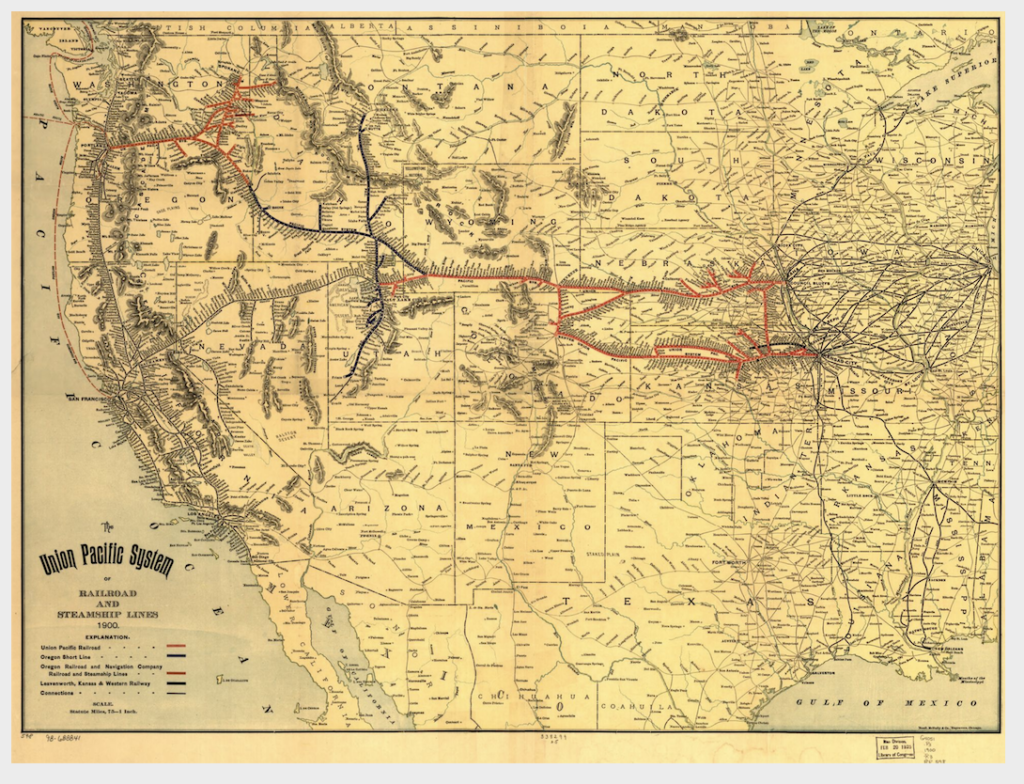

The development and build-out of speed-of-light networks closely parallels the emergence of the transcontinental railroads during the nineteenth century.

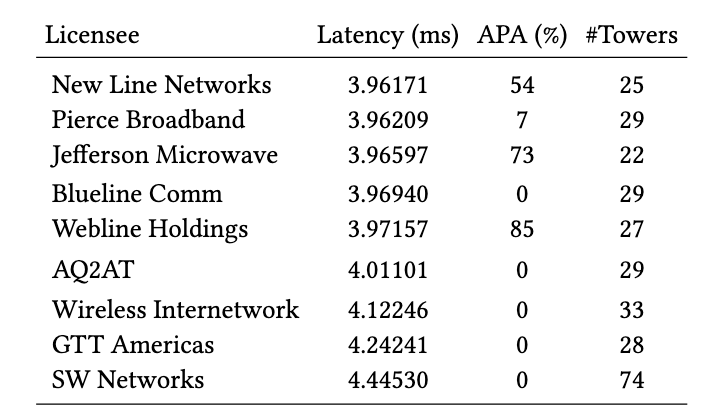

In April 2020, there were nine separate FCC-registered networks in the licensed microwave bands spanning the Chicago-to-New Jersey corridor and linking the CME to the NY4 data center, which hosts a variety of equity and options exchanges. The New Line, with a path latency of 3.96171 ms (compared with a geodesic minimum latency of 3.955 ms), is seen to be neck-and-neck with several competitors:
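To put "neck-and-neck" in perspective, the two latencies quoted above differ by only a few microseconds. The quick check below uses those two figures directly. The variable names are illustrative, and the conversion to distance assumes the geodesic minimum is defined by light travel in vacuum.

```python
GEODESIC_MS = 3.955       # geodesic minimum latency, CME to NY4 (from the text)
NEW_LINE_MS = 3.96171     # New Line path latency, April 2020 (from the text)
C_KM_PER_MS = 299.792458  # speed of light in vacuum, km per ms

excess_us = (NEW_LINE_MS - GEODESIC_MS) * 1000.0        # extra delay, microseconds
excess_pct = 100.0 * (NEW_LINE_MS / GEODESIC_MS - 1.0)   # relative overhead, percent
extra_km = (NEW_LINE_MS - GEODESIC_MS) * C_KM_PER_MS     # vacuum-equivalent extra path

print(f"excess delay: {excess_us:.2f} us ({excess_pct:.2f}% over geodesic)")
print(f"vacuum-equivalent extra path: {extra_km:.2f} km")
```

The overhead works out to about 6.7 microseconds, or roughly 0.17%. That is the equivalent of only about 2 km of extra light-travel distance over a route more than a thousand kilometers long.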

In the above table, APA stands for Alternate Path Availability and indicates the fraction of links that can be removed (for example, by heavy rain) such that the path latency of the remaining network is no more than 5% greater than the speed-of-light minimum.
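The APA metric can be computed with repeated shortest-path searches. This minimal sketch rests on one reading of the definition. Links are removed one at a time, and APA is the fraction of those single-link removals that still leave a path within 5% of the speed-of-light minimum. The graph representation (a dict mapping tower pairs to link latencies in ms) and the function names are hypothetical.

```python
import heapq
import math


def shortest_latency(links, src, dst, skip=None):
    """Dijkstra over an undirected graph {(u, v): latency_ms}, ignoring link `skip`."""
    graph = {}
    for (u, v), w in links.items():
        if (u, v) == skip:
            continue
        graph.setdefault(u, []).append((v, w))
        graph.setdefault(v, []).append((u, w))
    dist = {src: 0.0}
    heap = [(0.0, src)]
    while heap:
        d, u = heapq.heappop(heap)
        if u == dst:
            return d
        if d > dist[u]:
            continue  # stale heap entry
        for v, w in graph.get(u, []):
            nd = d + w
            if nd < dist.get(v, math.inf):
                dist[v] = nd
                heapq.heappush(heap, (nd, v))
    return math.inf  # dst unreachable


def alternate_path_availability(links, src, dst, c_min_ms, tolerance=0.05):
    """Fraction of single-link failures that keep latency within tolerance of c_min_ms."""
    ceiling = (1.0 + tolerance) * c_min_ms
    survivable = sum(
        1 for link in links
        if shortest_latency(links, src, dst, skip=link) <= ceiling
    )
    return survivable / len(links)


if __name__ == "__main__":
    # Hypothetical toy network: a fast route S-A-B-D and a slower detour A-C-D.
    links = {("S", "A"): 0.1, ("A", "B"): 1.0, ("B", "D"): 1.0,
             ("A", "C"): 1.5, ("C", "D"): 1.5}
    print(alternate_path_availability(links, "S", "D", c_min_ms=2.1))
```

In the toy network, only the two detour links can fail without breaching the 5% ceiling, so the sketch reports an APA of 0.4. Losing any link on the fast route, or the single access link S-A, pushes the latency over the limit or disconnects the endpoints.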

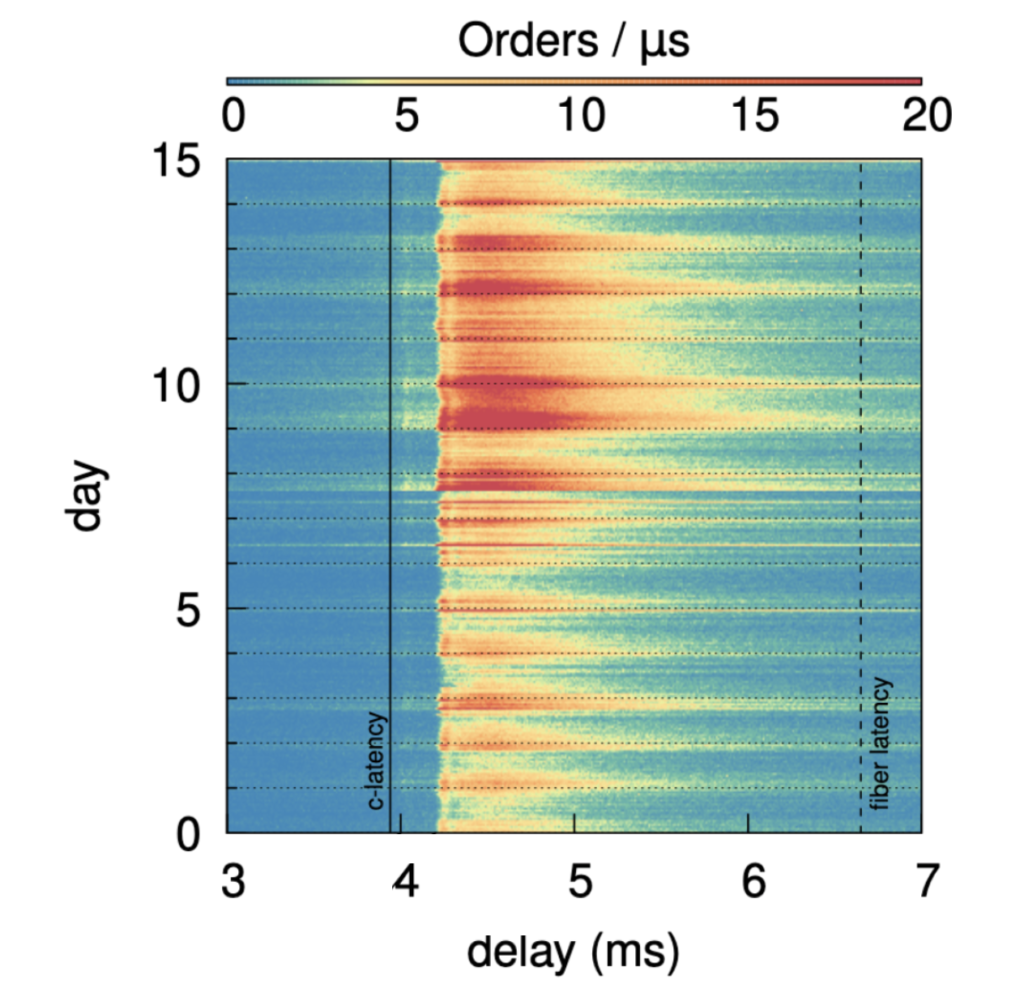

A completely independent monitoring technique consists of correlating precisely time-stamped trading data from Chicago and New Jersey, and measuring the statistical delay between events that occur at one end of the network and the responses that occur at the other end. As part of the capstone paper for our NSF-funded research, we undertook this analysis using gigabytes of tick data for the E-mini S&P 500 near-month futures contract (which trades in Illinois) and the SPY S&P 500 ETF (which trades in New Jersey). In work of this type, there are subtle issues associated with measuring the absolute lowest latencies at which information crosses the relay; these subtleties stem from the operational details of the exchange matching engines. Nonetheless, the signal correlations measured over long stretches of trading provide a remarkably powerful network probe, one that demonstrates that the networks consistently run end-to-end within a few percent of the physical limit, even during periods plagued by heavy weather.
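The statistical idea behind this probe can be illustrated with a lagged-coincidence histogram, a simple form of cross-correlation for event streams. For every event at one end, record the delays to all events at the other end that fall within a window. The relay latency then shows up as the delay bin that stands out above the random background. This is a simplified sketch on hypothetical timestamp lists (in microseconds). It is not the analysis pipeline used in the paper, and it ignores the matching-engine subtleties mentioned above.

```python
import bisect
import random
from collections import Counter


def lag_histogram(events_a, events_b, max_lag_us, bin_us):
    """Count offsets t_b - t_a in [0, max_lag_us), grouped into bins of width bin_us."""
    events_b = sorted(events_b)
    counts = Counter()
    for t_a in events_a:
        lo = bisect.bisect_left(events_b, t_a)
        hi = bisect.bisect_left(events_b, t_a + max_lag_us)
        for t_b in events_b[lo:hi]:
            counts[int((t_b - t_a) // bin_us)] += 1
    return counts


def estimate_lag_us(events_a, events_b, max_lag_us=10_000, bin_us=10):
    """Lower edge of the most populated lag bin, or None if no pairs fall in the window."""
    counts = lag_histogram(events_a, events_b, max_lag_us, bin_us)
    if not counts:
        return None
    peak_bin, _ = counts.most_common(1)[0]
    return peak_bin * bin_us


if __name__ == "__main__":
    rng = random.Random(0)
    # Synthetic data: each "Chicago" event triggers a "New Jersey" response
    # about 3.96 ms later, mixed with an equal number of unrelated events.
    chicago = sorted(rng.uniform(0, 1e7) for _ in range(2000))
    new_jersey = [t + 3962 + rng.uniform(0, 5) for t in chicago]
    new_jersey += [rng.uniform(0, 1e7) for _ in range(2000)]
    print("estimated lag:", estimate_lag_us(chicago, new_jersey), "us")
```

Even though half of the synthetic "New Jersey" events are unrelated noise, the true delay bin collects roughly one count per Chicago event, while each background bin collects only a handful. This is why correlations accumulated over long stretches of trading make such a sensitive probe.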

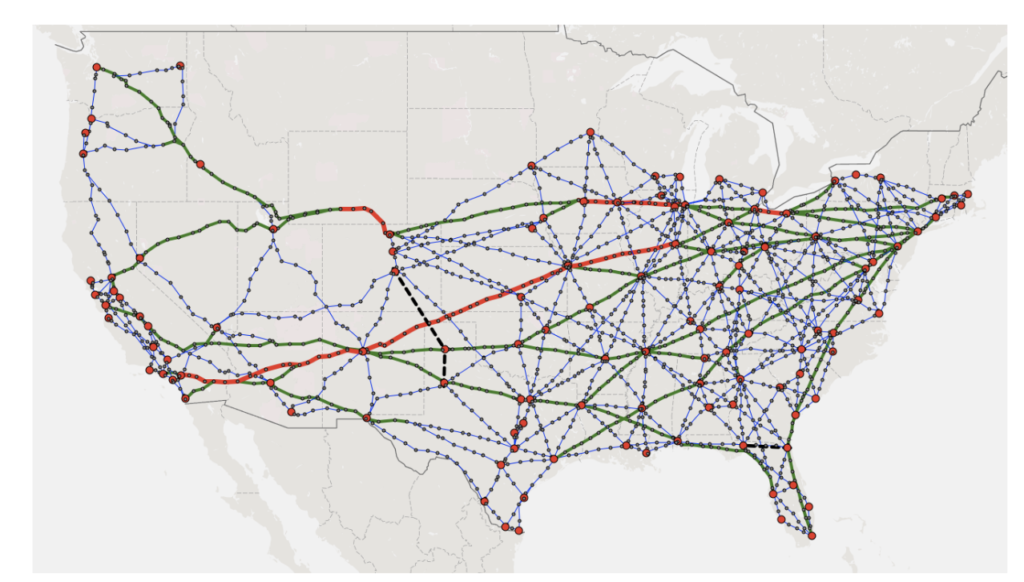

We've applied these (and other) real-world insights to a transcontinental network design, and we're excited to release, at the 19th USENIX Symposium on Networked Systems Design and Implementation (NSDI '22), our most up-to-date vision of what a speed-of-light Internet service provider (c-ISP) could look like, and what its performance would be.