Transit timing variations have a certain allure. Most extrasolar planets are found by patiently visiting and revisiting a star, and the glamour has begun to drain from this enterprise. Inferring, on the other hand, the presence of an unknown body (a “Planet X”) from its subtle deranging influences on the orbit of another, already known, planet is a more coolly cerebral endeavor. Yet to date, the TTV technique has not achieved its promise. The planet census accumulates exclusively via tried-and-true methods: 455 ± 21 at last count.

Backing a planet out of the perturbations that it induces is an example of an inverse problem. The detection of Neptune in 1846 remains the classic example. In that now increasingly distant age when new planets were headline news, the successful solution of an inverse problem was a secure route to scientific (and material) fame. The first TTV-detected planet won’t generate a chaired position for its discoverer, but it will most certainly be a feather in someone’s cap.

Where inverse problems are concerned, being lucky can be of equal or greater importance than being right. Both Adams’ and Le Verrier’s masses and semi-major axes for Neptune were badly off (Grant 1852). What counted, however, was the fact that they had Neptune’s September 1846 sky position almost exactly right. Le Verrier pinpointed Neptune to within only 55 arc-minutes of its true position, that is, to the correct 1/15,600th patch of the entire sky.
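The 1/15,600 figure is easy to check. Assuming “within 55 arc-minutes” means a circular patch of 55′ angular radius, that patch is a spherical cap of area 2π(1 − cos r) steradians out of the 4π steradians of the whole sky, so the fraction is (1 − cos r)/2. A minimal sketch:

```python
import math


def sky_fraction(radius_arcmin: float) -> float:
    """Fraction of the celestial sphere inside a spherical cap of the given
    angular radius: cap area 2*pi*(1 - cos r) divided by 4*pi."""
    r = math.radians(radius_arcmin / 60.0)
    return (1.0 - math.cos(r)) / 2.0


# Assumption: the 55-arcminute error is treated as the radius of a circle.
frac = sky_fraction(55.0)
print(f"1/{1.0 / frac:,.0f} of the sky")  # roughly 1/15,600
```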

In the past five years, a literature has been growing in anticipation of the detection of transit timing variations. The first two important papers, one by Eric Agol and collaborators and one by Matt Holman and Norm Murray, came out nearly simultaneously in 2005 and showed that the detection of TTVs will be eminently feasible when the right systems turn up. More recently, a series of articles led by David Nesvorny takes a direct stab at outlining solution methods for the TTV inverse problem, and illustrates that the degeneracy of solutions, the fly in the ointment for pinpointing Neptune’s orbit, will also be a severe problem when it comes to pinning down the perturbers of transiting planets from transit timing variations alone.

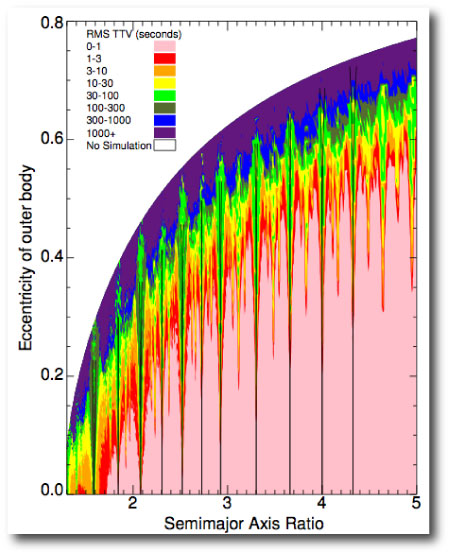

In general, transit timing variations are much stronger and much easier to detect if the unseen perturbing body is in mean-motion resonance with the known transiting planet. In a paper recently submitted to the Astrophysical Journal, Dimitri Veras, Eric Ford and Matthew Payne have carried out a thorough survey of exactly what one can expect for different transiter-perturber configurations, with a focus on systems where the transiting planet is a standard-issue hot Jupiter and the exterior perturber has the mass of the Earth. They show that for systems lying near integer period ratios, tiny changes in the system’s initial conditions can have huge effects on the amplitude of the resulting TTVs. Here’s one of the key figures from their paper, a map of median TTVs arising from perturbing Earths with various orbital periods and eccentricities:

The crazy-colored detail, which Veras et al. describe as the “flames of resonance,” gives the quite accurate impression that definitive solutions to the TTV inverse problem will not be easy to achieve. One of the conclusions drawn by the Veras et al. paper is that even in favorable cases, one needs at least fifty well-measured transits if the perturber is to be tracked down via timing measurements alone.
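One way to see why near-integer period ratios are so touchy: for a pair close to a first-order j:(j−1) resonance, the TTV signal oscillates on a “super-period” of 1/|j/P′ − (j−1)/P|, where P and P′ are the inner and outer periods, and this timescale blows up as the pair approaches exact commensurability. This is a standard analytic result for near-resonant pairs, not something taken from Veras et al., and it captures only the timescale of the signal, not its amplitude or the fine structure of the “flames.” The periods below are hypothetical.

```python
def ttv_super_period(p_inner: float, p_outer: float, j: int) -> float:
    """Super-period of the TTV signal (same units as the inputs) for a pair
    near the first-order j:(j-1) resonance: 1 / |j/P_outer - (j-1)/P_inner|."""
    freq = j / p_outer - (j - 1) / p_inner
    if freq == 0.0:
        raise ValueError("exact commensurability: super-period is formally infinite")
    return 1.0 / abs(freq)


# Hypothetical 3-day hot Jupiter with an exterior Earth just wide of 2:1.
print(ttv_super_period(3.0, 6.06, 2))  # about 303 days
print(ttv_super_period(3.0, 6.01, 2))  # about 1803 days
```

Nudging the outer period by less than one percent changes the signal timescale sixfold, which is one face of the sensitivity to initial conditions described above.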

The Kepler Mission holds out the promise of systems in which TTVs will be simultaneously present, well measured, and abundant. In anticipation of real TTV data, Stefano Meschiari has worked hard to update the Systemic Console so that it can be used to get practical solutions to the inverse problem defined by a joint TTV-RV data set. An improved console that can solve the problem is available for download, and a paper describing the method is now on astro-ph. In short, the technique of simulated annealing seems to provide the best route to finding solutions.
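For readers unfamiliar with simulated annealing, the core idea fits in a few lines. What follows is a generic one-dimensional sketch with a Metropolis acceptance rule and geometric cooling, not the Systemic Console’s implementation; the toy cost function, step size and cooling schedule are all hypothetical choices for illustration. The key ingredient is that while the “temperature” is high the search sometimes accepts worse solutions, which lets it escape local minima of a multimodal χ² surface.

```python
import math
import random


def anneal(cost, x0, step, lo, hi, t0=1.0, cooling=0.998, n_iter=5000, seed=0):
    """Minimal 1-D simulated annealing. Returns the lowest-cost point visited
    and its cost."""
    rng = random.Random(seed)
    x, fx = x0, cost(x0)
    best_x, best_f = x, fx
    temp = t0
    for _ in range(n_iter):
        # Propose a Gaussian step, clamped to the allowed range [lo, hi].
        cand = min(hi, max(lo, x + rng.gauss(0.0, step)))
        fc = cost(cand)
        # Always accept improvements; accept worse moves with probability
        # exp(-dF / T), which shrinks as the temperature falls.
        if fc <= fx or rng.random() < math.exp(-(fc - fx) / temp):
            x, fx = cand, fc
            if fx < best_f:
                best_x, best_f = x, fx
        temp *= cooling  # geometric cooling schedule
    return best_x, best_f


# Hypothetical cost with two competing minima, loosely mimicking a
# 2:1-versus-3:1 degeneracy; x stands in for a period ratio.
def toy_cost(x):
    return min((x - 2.0) ** 2, (x - 3.0) ** 2 + 0.05)


best_x, best_f = anneal(toy_cost, x0=1.0, step=0.3, lo=1.0, hi=4.0)
print(best_x, best_f)
```

A real TTV fit would anneal over all the perturber’s orbital elements at once, with each cost evaluation requiring an N-body integration, but the accept/reject logic is the same.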

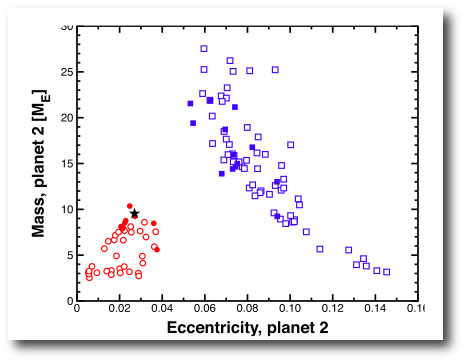

A data set with TTVs alone makes for a purer inverse problem, but it looks like it’s going to be generally impractical to characterize a perturber on the basis of photometric data alone. Consider an example from our paper. We generated a fiducial TTV system by migrating a relatively hefty 10 Earth-mass planet deep into 2:1 resonance with a planet assumed to be a twin to HAT-P-7. We then created data sets spanning a full year, consisting of 166 consecutive transit timing measurements, each with 17-second precision, along with a relatively modest set of radial velocity measurements. We launched a number of simulated annealing experiments and allowed the parameters of the perturbing planet to float freely.
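The numbers in this setup hang together with a little arithmetic. Assuming the HAT-P-7 twin shares HAT-P-7b’s orbital period of about 2.2047 days and that the first transit falls at the start of the year, one year of coverage yields 166 transits. The sketch below also lists the exterior periods at exact 2:1 and 3:1 commensurability, and the statistical weight gained per transit when the timing error drops from 17 s to 4.3 s.

```python
import math

P_TRANSITER = 2.2047  # days; assumed equal to HAT-P-7b's orbital period
YEAR = 365.25         # days

# Transits in one year, counting one at t = 0 (assumed start of the data set).
n_transits = math.floor(YEAR / P_TRANSITER) + 1

# Exterior perturber periods at exact 2:1 and 3:1 commensurability.
p_21 = 2 * P_TRANSITER
p_31 = 3 * P_TRANSITER

# Chi-square weight (1 / sigma^2) gained per transit going from 17 s to 4.3 s.
weight_gain = (17.0 / 4.3) ** 2

print(n_transits, round(p_21, 2), round(p_31, 2), round(weight_gain, 1))
```

In other words, the higher-precision case gives each transit roughly sixteen times the weight in a χ² fit, yet the 2:1 and 3:1 families still both survive.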

The resulting solutions to the synthetic data set cluster around two families: configurations where the perturber is in 2:1 resonance (red symbols), and configurations where it is in 3:1 resonance (blue symbols). Furthermore, increasing the precision of the transit timing measurements to 4.3 seconds per transit (solid symbols) does little to break the degeneracy:

The upshot of our paper is that high-quality RV measurements will be integral to full characterizations of the planets that generate TTVs. At the risk of sounding like a broken record, this means that to extract genuine value, one needs the brightest available stars for transits…

“Brightest” seems to be a relative term, to state the obvious. Current 8-meter-class telescopes and great spectrographs seem to be able to acquire the necessary RV data down to around 12th magnitude (once the extragalactic bullies are pushed away from the telescopes). The next generation should add 3 or 4 magnitudes to that, and this will include most of the relevant Kepler data. Is it necessary for the RV data and the photometry to be simultaneous to remove the degeneracy?

When this blog sounds like a broken record it is still 500% better than 99% of all other science blogs :-)

I agree with exofever. I am no astrophysicist, but for a layman scientist this blog is utterly fascinating. I know as an academic you don’t get much spare time, but please keep posting!


I, too, agree with exofever, and with nasalcherry as well.

Greg really your blog is freaking amazing. I visit this site multiple times a day, almost religiously. That isn’t an exaggeration. Please keep up the enlightening posts.

Off topic a bit but:

(Have you seen the Kepler papers that showed up on arXiv? At the rate the field is evolving, the secret behind your anagram may be “last year’s excitement,” à la CoRoT’s first two announcements a few years ago.)

Just curious, but is there any plan at all to use the Kepler Mission data to be released on June 15, 2010 with the systemic console? NASA says it’s about 95 gigabytes of data concerning 150,000 stars. Surely there is a way to convert that to .vels files?

(Even some web page with a tutorial of some kind on how to create .vels files would be very helpful here)

Hi Greg

I follow this blog almost daily, but I’m always hoping to find out what happened with the backend. I have spent a long time solving the Systemic Jr. problems, and I wonder what results came from them.

Might it be that hot Jupiters just don’t occur in multiple planet systems often enough for TTVs to be a likely possibility? Certainly of the multi-planet systems known, the majority do not contain hot Jupiters, but whether that’s because the observations of most hot Jupiter systems do not span long enough timescales to detect additional planets or due to a genuine anticorrelation between HJs and multi-planet systems I’m not sure.